Content from Spatially Resolved Transcriptomics in Life Sciences Research

Last updated on 2025-10-07 | Edit this page

Estimated time: 15 minutes

Overview

Questions

- What is spatial transcriptomics?

- What research questions or problems can spatial transcriptomics address?

- How do the technologies work?

- Which technology will we learn about in this lesson?

Objectives

- Describe why and how spatial transcriptomics can be used in research.

- Describe how spatial transcriptomics technology works.

- Describe how spatial transcriptomics addresses the limitations of single-cell or bulk RNA sequencing technologies.

Spatial transcriptomics in biomedical research

Investigating the organization of cells and tissues is fundamental to life sciences research. Cells and tissues situated in different regions of an organ can possess diverse functions and cell types. These cells in turn are influenced by varying tissue microenvironments, receiving and processing distinct information from that microenvironment. Co-located cells can communicate directly with one another through chemical and mechanical signals, responding to these signals with changes in their own state. Thus, knowing the spatial organization of cells in a tissue can reveal cell and tissue function.

Department of Histology, Jagiellonian University Medical College CC BY-SA 3.0 DEED

Spatially resolved transcriptomics describes spatial organization and cell signals, specifically gene expression signals. Spatial patterns of gene expression determine how genes are regulated within a tissue system and how those tissues and their component cells function. Spatial transcriptomic (ST) methods map cell position in a tissue, clarifying the physical relationships between cells and cellular structures. ST simultaneously measures gene expression, delivering valuable information about cell phenotype, state, and cell and tissue organization and function. The combination of cellular expression and position sheds light on signals a cell sends or receives through cell-to-cell interactions. Spatial information localizes cell signaling while delivering comprehensive gene expression profiling within tissues.

Fred the Oyster Public domain, via Wikimedia Commons CC BY-SA 4.0 DEED

Spatial transcriptomics addresses a key obstacle in single-cell and bulk RNA sequencing studies: their loss of spatial information. Spatial organization and structure determine function in most tissues and organs, so capturing both spatial and expression information is critical for understanding tissue function in neuroscience, immuno-oncology, developmental biology, and most other fields.

Spatial transcriptomics technologies

Spatial transcriptomics technologies broadly fall within two groups: imaging-based and sequencing-based methods. Both imaging- and sequence-based datasets are available through the The BRAIN Initiative - Cell Census Network. Sequencing-based datasets are featured in the Human Cell Atlas. These technologies vary in ability to profile entire transcriptomes, deliver single-cell resolution, and detect genes efficiently.

Imaging-based technologies

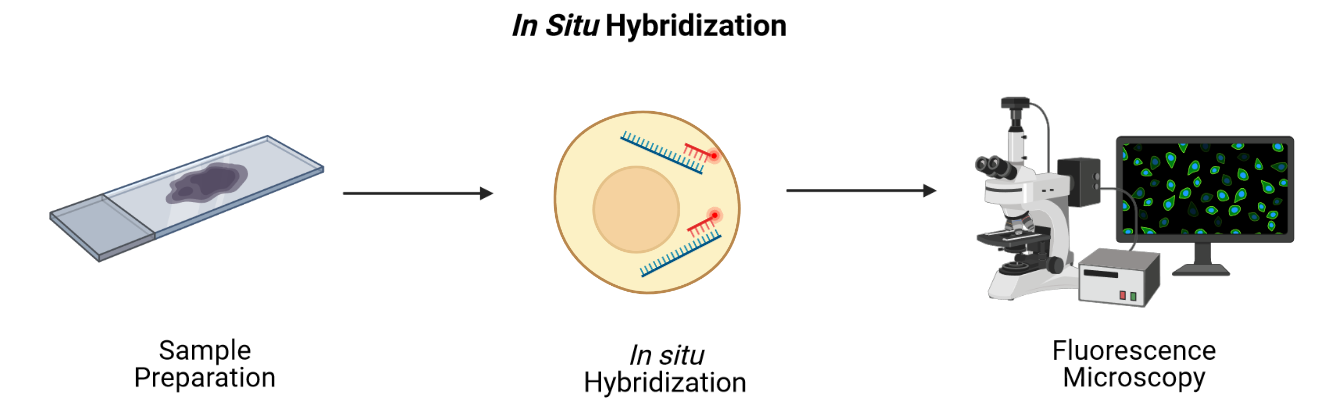

Imaging-based technologies read transcriptomes in place using microscopy at single-cell or even single-molecule resolution. They identify messenger RNA (mRNA) species fluorescence in situ hybridization (FISH), i.e., by hybridizing mRNA to gene-specific fluorescent probes.

Adapted from Spatial Transcriptomics Overview by SlifertheRyeDragon. Image created with Biorender.com. Public domain, via Wikimedia Commons CC BY-SA 4.0 DEED

RNA can be visualized in place in the original tissue environment by hybridizing labeled probes to their specific targets. Current FISH methods employ multiple hybridization rounds, with risk of error for each transcript growing exponentially with each round. FISH methods are limited in the size of tissue that they can profile and most are applicable only to fresh-frozen (FF) tissue. They can also be time-consuming and expensive due to microscopic imaging they require. Since they target specific genes, they can only detect genes that are in the probe set. They have high spatial resolution though, even delivering single-molecule resolution in single-molecule FISH (smFISH). Even technologies that can profile hundreds or thousands of mRNA transcripts simultaneously, though, target throughput is low and spectral overlap of fluorophores complicates microscopy.

Conventional FISH methods have few distinct color channels that limit the number of genes that can be simultaneously analyzed. Multiplexed error-robust FISH (MERFISH) overcomes this problem, greatly increasing the number of RNA species that can be simultaneously imaged in single cells by using binary code gene labeling in multiple rounds of hybridization.

SlifertheRyeDragon, CC BY-SA 4.0, via Wikimedia Commons

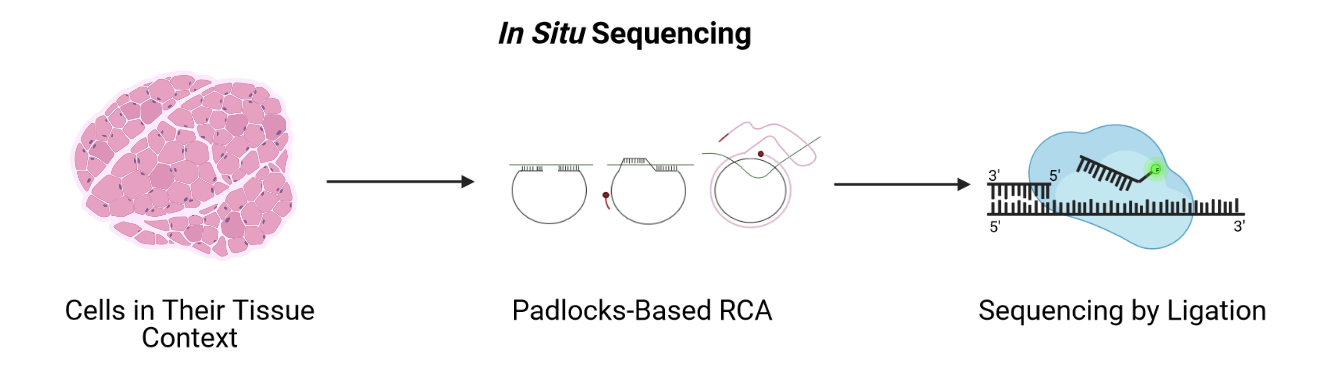

A second imaging-based method, in situ sequencing, amplifies and sequences mRNAs directly within a block or section of fresh-frozen (FF) or formalin-fixed paraffin embedded (FFPE) tissue.

Adapted from Spatial Transcriptomics Overview by SlifertheRyeDragon.

Image created with Biorender.com. Public domain, via Wikimedia Commons

CC

BY-SA 4.0 DEED

Adapted from Spatial Transcriptomics Overview by SlifertheRyeDragon.

Image created with Biorender.com. Public domain, via Wikimedia Commons

CC

BY-SA 4.0 DEED

Messenger RNA (mRNA) is reverse transcribed to complementary DNA (cDNA) within tissue sections. A padlock probe binds to the cDNA, which is then circularized. Following circularization, the cDNA is amplified by rolling-circle amplification (RCA), then sequenced by ligation for decoding. Probes profile one or two bases at a time using different fluorophores, eventually revealing the identity of the cDNA through imaging. Since it requires imaging, in situ sequencing is an imaging-based method even though it involves sequencing. In situ sequencing can accommodate larger tissue sections than can FISH, though FISH methods are more efficient at detecting mRNA of genes in the probe set. Like FISH, in situ sequencing requires considerable imaging time on a microscope but delivers high spatial resolution. Both methods require a priori knowledge of target mRNA.

Sequencing-based technologies

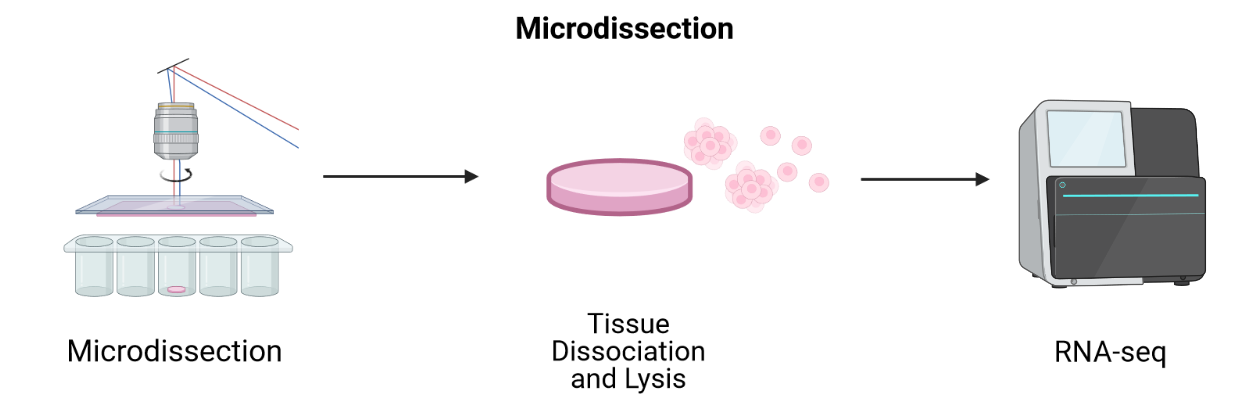

Sequencing-based methods capture, sequence, and count mRNA using next-generation sequencing while retaining positional information. This is distinct from in situ sequencing because next-generation sequencing is employed. Sequencing-based methods may be unbiased, in which they capture the entire transcriptome, or probe-based, in which they typically capture the majority of protein-coding genes. Sequencing-based methods retain spatial information through laser-capture microdissection (LCM), microfluidics, or through ligation of mRNAs to arrays of barcoded probes that record position.

LCM-based methods employ lasers to cut a tissue slice or fuse tissue to a membrane followed by sequencing of individual cells.

Adapted from Spatial Transcriptomics Overview by SlifertheRyeDragon.

Image created with Biorender.com. Public domain, via Wikimedia Commons

CC

BY-SA 4.0 DEED

Adapted from Spatial Transcriptomics Overview by SlifertheRyeDragon.

Image created with Biorender.com. Public domain, via Wikimedia Commons

CC

BY-SA 4.0 DEED

LCM techniques process tissue sections for transcriptomic profiling by isolating regions of interest. They are useful for profiling transcriptomes as a first pass and for identifying RNA isoforms, but their blunt approach to capturing spatial expression data limits spatial resolution and requires many samples for sequencing. Since they focus on regions of interest, it is difficult to grasp spatial expression across a whole tissue. LCM is an older technology that has long been used with FFPE tissues. Modern LCM-based approaches include Nanostring’s GeoMx DSP and STRP-seq.

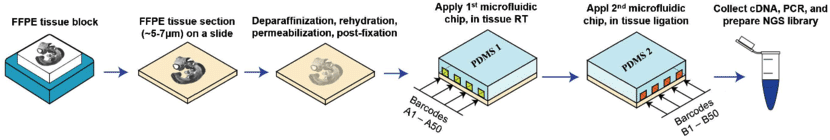

Microfluidics places a chip with multiple barcode-containing channels onto a tissue section followed by a second chip with channels perpendicular to the first. The barcodes are then ligated to each other to create an array of unique barcodes on the tissue. This deterministic barcoding is employed in DBiT-seq. DBiT-seq can be used with FFPE tissues. This approach is helpful to avoid diffusion of mRNA away from capture areas, though a disadvantage is that cells often sit astride multiple capture areas.

Adapted from

Liu

Y, Enninful A, Deng Y, & Fan R (2020). Spatial transcriptome

sequencing of FFPE tissues at cellular level. Preprint.

CC

BY-SA 4.0 DEED

Adapted from

Liu

Y, Enninful A, Deng Y, & Fan R (2020). Spatial transcriptome

sequencing of FFPE tissues at cellular level. Preprint.

CC

BY-SA 4.0 DEED

Other array-based methods capture mRNA with spatially-barcoded probes and sequence them. They can profile larger tissue sections than can FISH or in situ sequencing and they don’t rely on time-consuming microscopic imaging. Spatial resolution is lower, however.

In this lesson we will use data from positionally barcoded arrays.

James Chell, Spatial transcriptomics ii, CC BY-SA 4.0

| Technology | Gene detection efficiency | Transcriptome-wide profiling | Spatial resolution | Tissue area |

|---|---|---|---|---|

| FISH | + | - | + | - |

| In situ sequencing | - | - | + | + |

| LCM-based | + | + | - | - |

| Microfluidics | - | + | - | + |

| Array-based | - | + | - | + |

Table 1. Relative strengths and weaknesses of spatial transcriptomics technologies by general category.

This introduction to the technologies is intended to help you navigate a complex technological landscape so that you can learn more about existing technologies. It is not intended to be comprehensive.

The diversity in spatial transcriptomics technologies is enormous and rapidly developing. If you would like to learn more about spatial transcriptomics technologies, please see the list of references located here.

Discussion: Which technology is right for your research?

Would an imaging-based or a sequencing-based solution be preferable for your research? Why?

From the descriptions above, which technology do you think would best suit your research? Would you use fresh-frozen (FF) or formalin-fixed tissues embedded in paraffin (FFPE)? Even if your institution does not offer service using a specific technology, describe which best suits your research and why you think it’s best suited.

Which technology you use depends on your experimental aim. If you are testing hypotheses about specific genes, you can profile those genes at high resolution and sensitivity with an imaging-based method. If instead you aim to generate hypotheses, next generation sequencing-based methods that profile many genes in an unbiased manner would be best.

10X Genomics Visium technology

In this lesson we will use data from an array-based method called Visium that is offered by 10X Genomics. Visium is an upgrade and commercialization of the spatial transcriptomics method described in 2016 in Science, 353(6294) and illustrated in general in the figure above. A more specific schematic is given below.

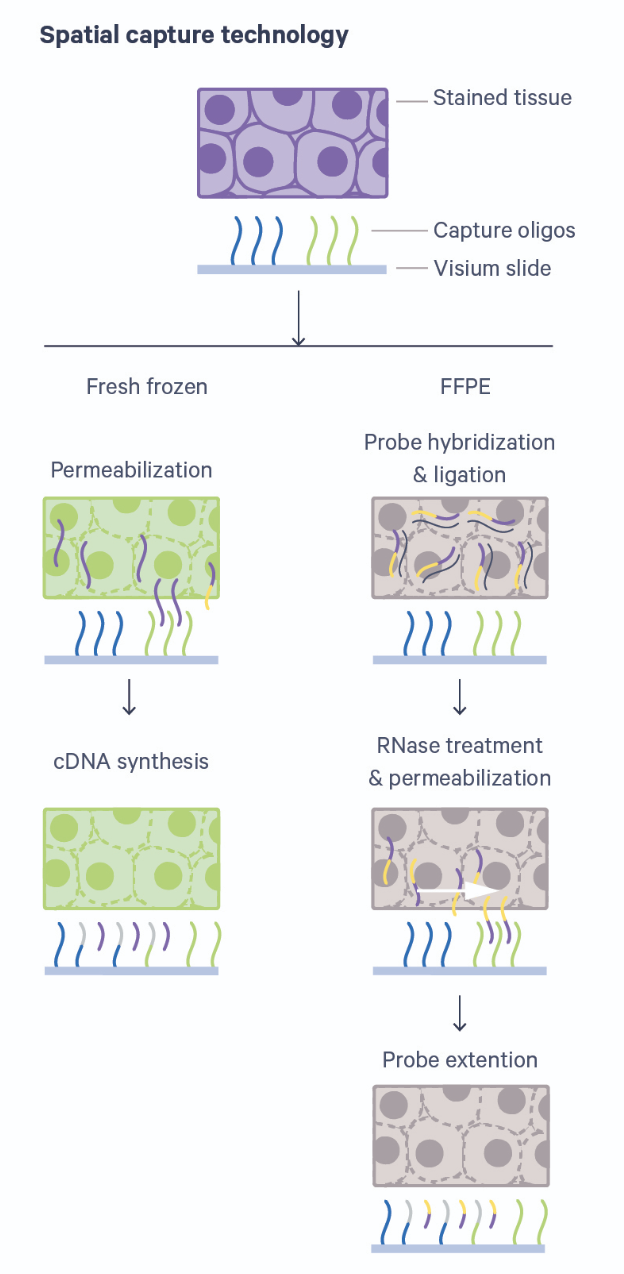

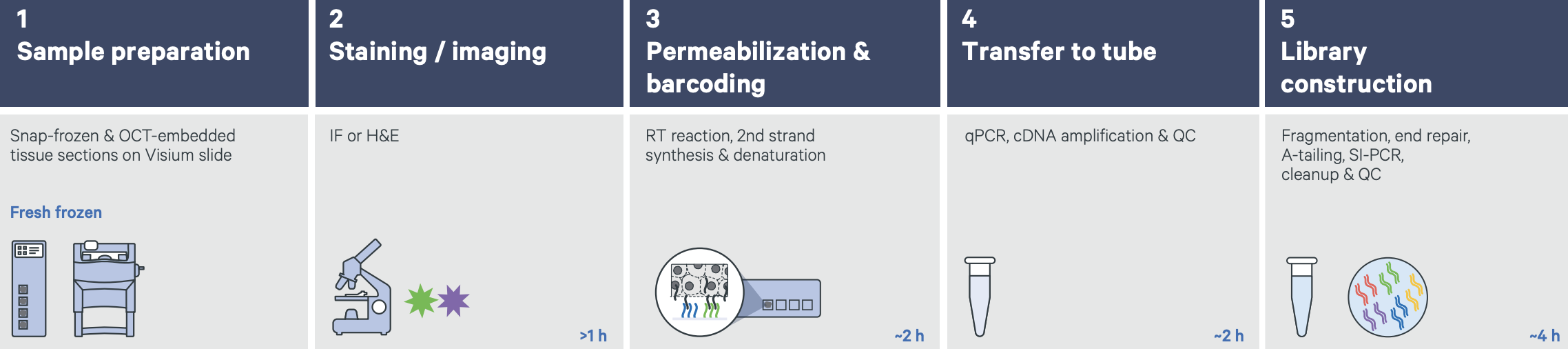

Thin tissue sections are placed atop spots printed with spatial barcodes. For fresh-frozen (FF) tissues, tissue fixing and permeabilization discharges mRNA to bind with spatially barcoded probes that indicate the position on the slide. The assay is sensitive to permeabilization time, which is often optimized in a separate experimental procedure. Captured mRNA is then reverse transcribed to cDNA and sequencing libraries created from these. The formalin-fixed paraffin embedded (FFPE) assay utilizes probe pairs that identify each gene in the probe set and capture target genes by hybridizing to them. Permeabilization discharges the captured mRNA to spatially barcoded probes on the slide, but does not require a separate optimization step as in the FF protocol. The capture mRNA is then extended to include complements of the spatial barcodes. Sequencing libraries are then formed from the captured and barcoded mRNA.

Spatial transcriptomics combines two key modes: histological imaging and gene expression profiling. Histological imaging captures tissue morphology with standard staining protocols while expression profiling is captured by sequencing spatially barcoded cDNA.

Sequencing-based datasets have grown faster than have imaging-based datasets, with Visium dominating published datasets. Unlike most other sequencing-based technologies, Visium accommodates both FF or FFPE tissue. Each spot provides average gene expression measurements from between one to a few tens of cells, approaching single-cell resolution. Average gene expression measurements are combined with histological images that couple molecular detail and tissue morphology and structure.

- Spatial transcriptomics provides the location of cells relative to neighboring cells and cell structures.

- A cell’s location is useful data for describing its phenotype, state, and cell and tissue function.

- Spatial transcriptomics addresses a key obstacle in bulk and single-cell RNA sequencing studies: their loss of spatial information.

- The main goal of spatial transcriptomics studies is to integrate expression with spatial information.

Content from Data and Study Design

Last updated on 2025-10-07 | Edit this page

Estimated time: 30 minutes

Overview

Questions

- Which data will we explore in this course?

- How was the study that generated the data designed?

- What are some critical design elements for rigorous, reproducible spatial transcriptomics experiments?

Objectives

- Describe a spatial transcriptomics experiment.

- Identify important elements for good experimental design.

The Data

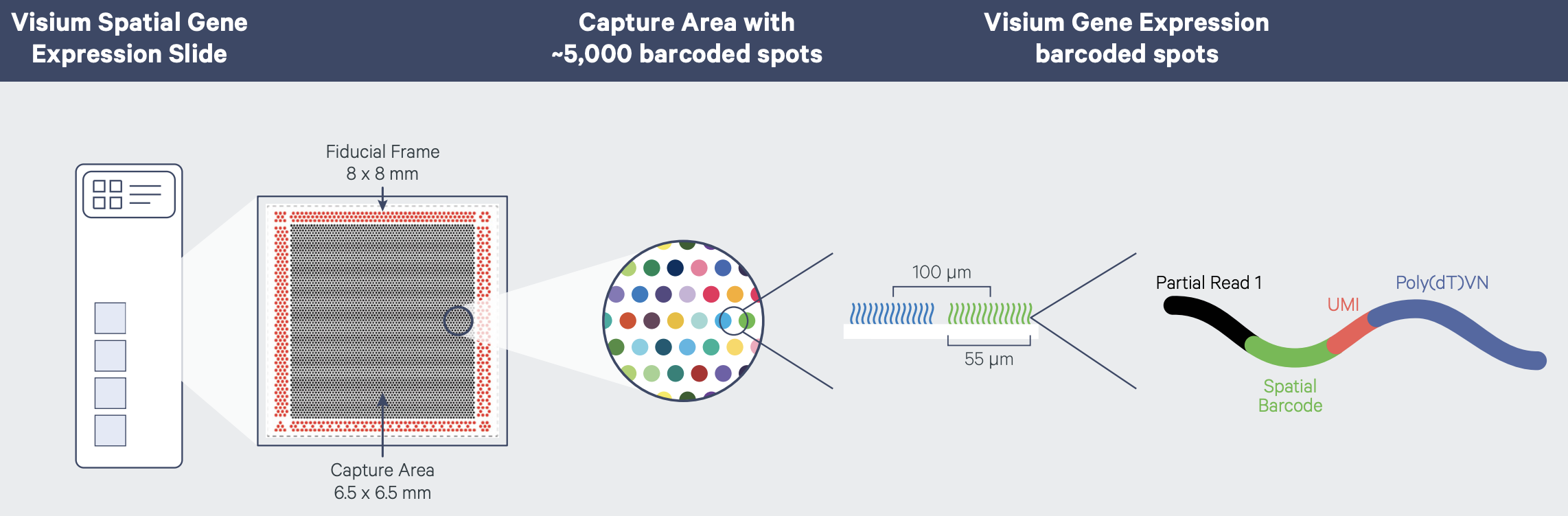

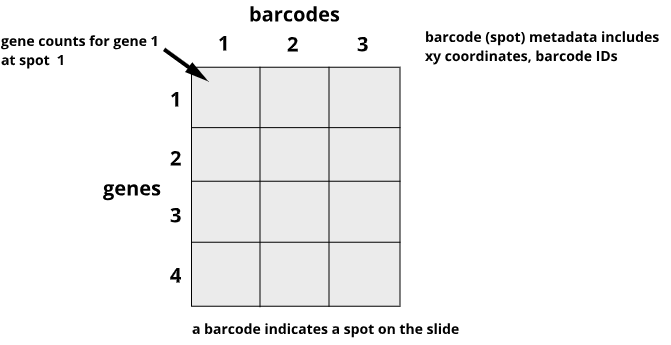

Recall that tissue is laid on a glass slide containing spots with primers to capture mRNA. The graphic below details a Visium slide with four capture areas. Each capture area has arrays of barcoded spots containing oligonucleotides. The oligonucleotides each contain a poly(dT) sequence for capture of polyadenylated molecules, a unique molecular identifier (UMI) to identify duplicate molecules, a spatial barcode shared by all oligonucleotides within the same spot, and a partial read for library preparation and sequencing.

In spatial transcriptomics the barcode indicates the x-y coordinates of the spot. Barcodes are generic identifiers that identify different things in different technologies. A barcode in single-cell transcriptomics, for example, refers to a single cell, not to a spot on a slide. When you see barcodes in ST data, think spot, not single cell. In fact, one spot can capture mRNA from many cells. This is a feature of ST experiments that is distinct from single-cell transcriptomics experiments. As a result, many single-cell methods won’t work with ST data. Later we will look at methods to deconvolve cell types per spot to determine the number and types of cells in each spot. Spots can contain zero, one, or many cells.

The graphic below shows a Visium workflow for fresh-frozen tissues.

Graphic from

Grant

application resources for Visium products at 10X Genomics

Graphic from

Grant

application resources for Visium products at 10X Genomics

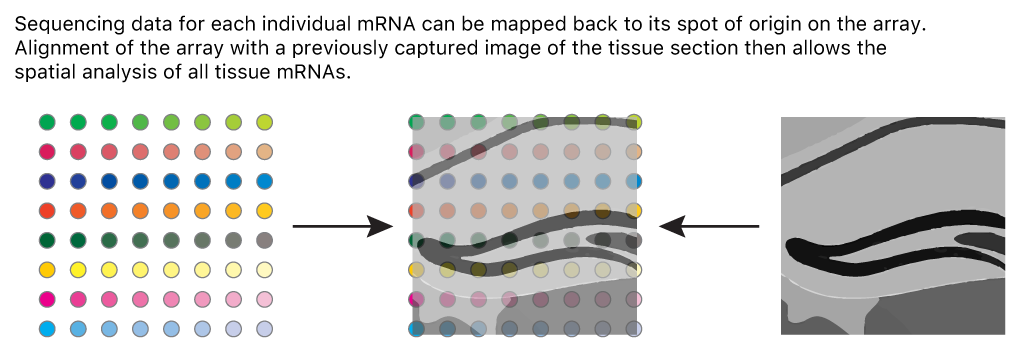

Count data for each mRNA are mapped back to spots on the slide to indicate the tissue position of gene expression. An image of the tissue overlaid on the array of spots pinpoints spatial gene expression in the tissue.

Adapted from James Chell, Spatial transcriptomics ii, CC BY-SA 4.0

Data from the 10X Genomics Visium platform contain gene identifiers in rows and barcode identifiers in columns. In the graphic below, row 1 of column 1 contains the mRNA counts for gene 1 at barcode (spot) 1.

Challenge 1: Row and column sums

What does the sum of a single row signify?

What does the sum of a single column signify?

The row sum is the total expression of one gene across all spots on the slide.

R

sum('data[1, ]')

The column sum is the total expression of all genes for one spot on the slide.

R

sum('data[ , 1]')

Design and Motivation of Prefrontal Cortex Study

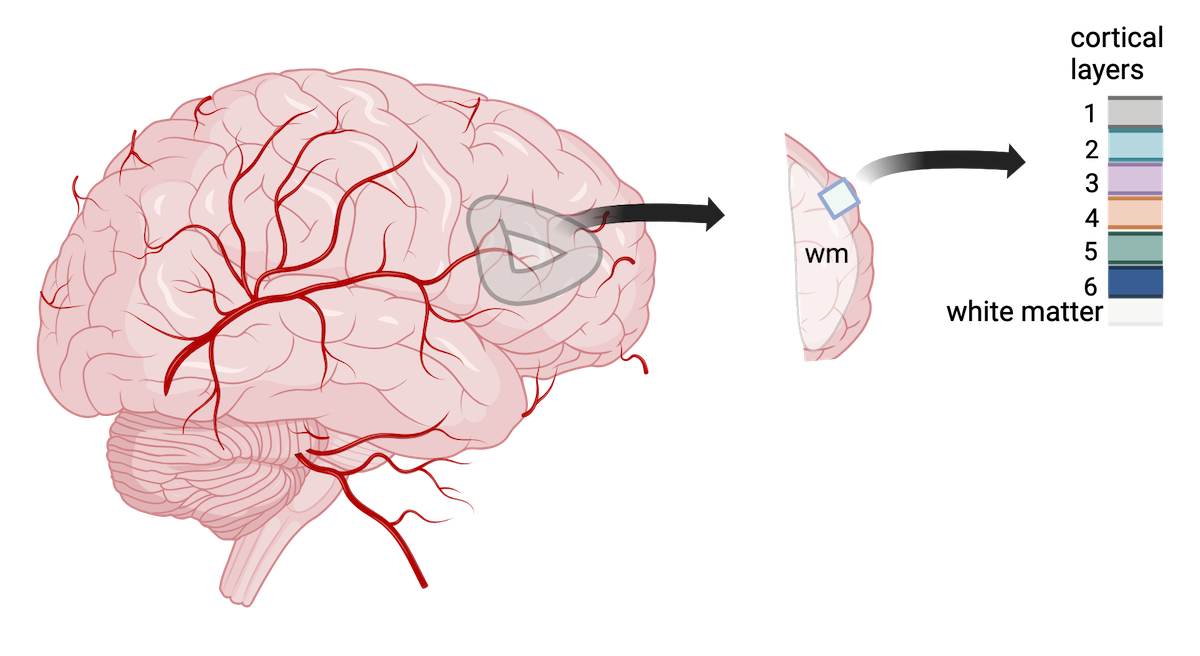

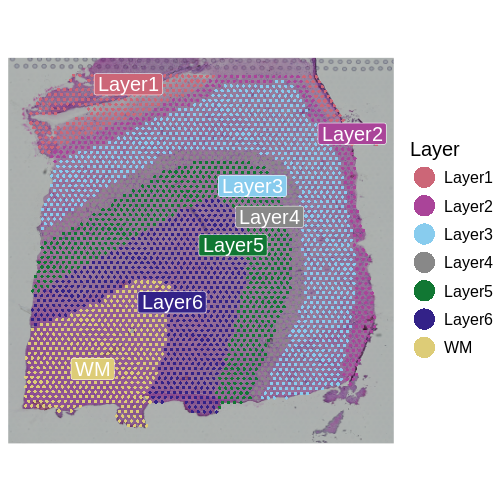

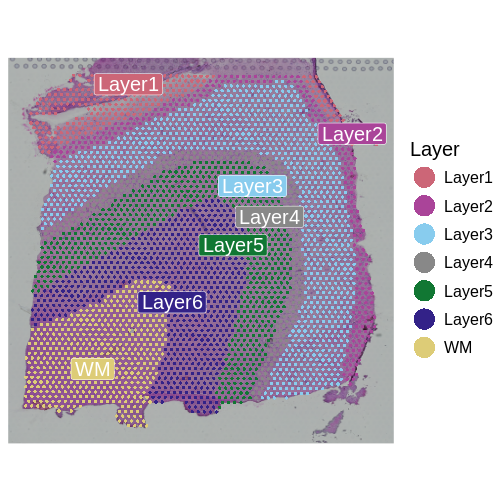

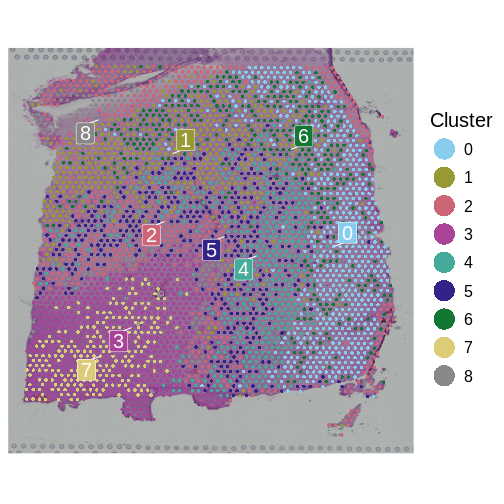

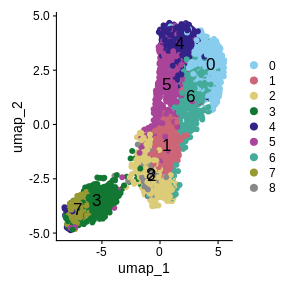

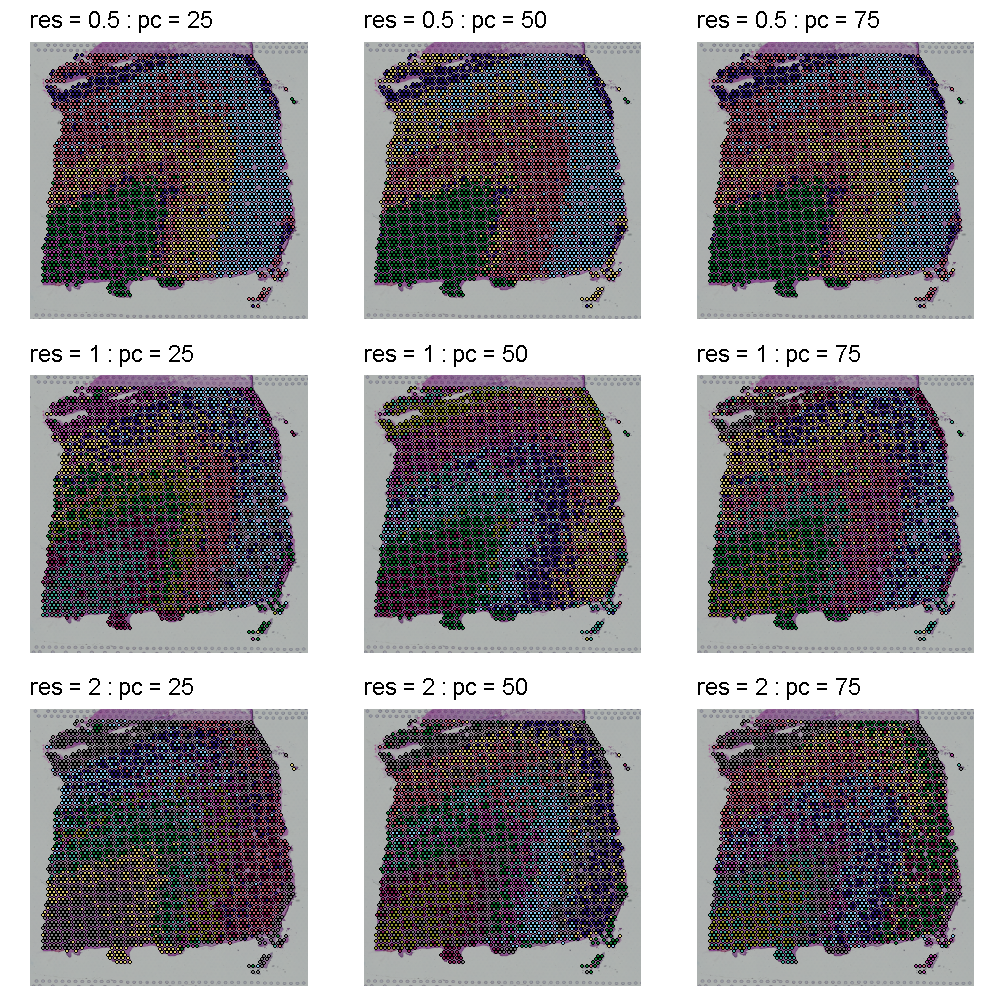

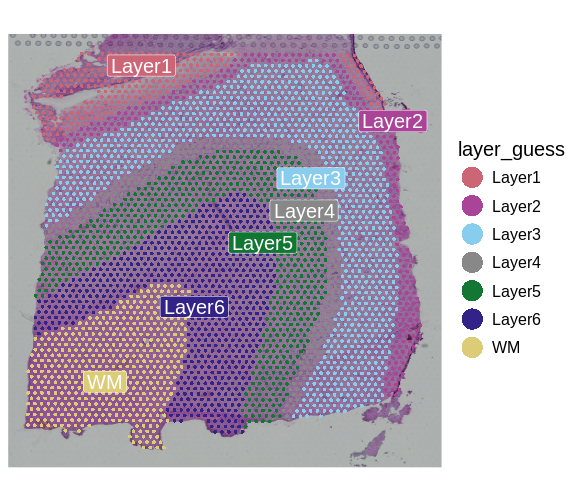

We will use data from a study of the human prefrontal cortex published in Transcriptome-scale spatial gene expression in the human dorsolateral prefrontal cortex by Maynard et al, Nat Neurosci 24, 425–436 (2021). This region of the brain, involved in higher-order cognition and managing thoughts and actions in conformance with internal goals, is particularly amenable to spatial analyses because its structure is intimately tied to its function. Specifically, the six cortical layers and the white matter shown below are comprised of cells with distinct gene expression profiles and differing patterns of morphology, physiology, and connectivity.

Adapted from Maynard et al, Nat Neurosci 24, 425–436 (2021). Created with BioRender.com.

The dorsolateral prefrontal cortex is implicated in some neuropsychiatric disorders such as autism spectrum disorder (ASD) and schizophrenia disorder (SCZD), and differences in gene expression and pathology are located in specific cortical layers. Localizing gene expression at cellular resolution within the six layers can illuminate disease mechanisms and brain development. As such, the authors aimed to map gene expression to the spatial organization of the six cortical layers. This course will largely follow their analyses in defining the gene expression markers of the cortical layers.

Spatial transcriptomics has several advantages relative to other technologies in meeting the authors’ objective. Owing to their large size and fragility, human neurons are difficult to isolate with scRNA-seq. This has motivated the use of single-nucleus(sn)RNA-seq in most studies. Unfortunately, this modality fails to capture cytoplasmic compartments of the cell, as well as axons and dendrites, and the genes within these regions have been associated with SCZD and ASD. Laser capture microdissection followed by sequencing does capture all cellular compartments, but can not be use to detect spatial gradients of gene expression since it removes tissues from their spatial context. Spatial transcriptomics offers the ability both to capture the entire repertoire of cells resident in the brain and to establish the expression gradient across them within the spatial context of the intact tissue.

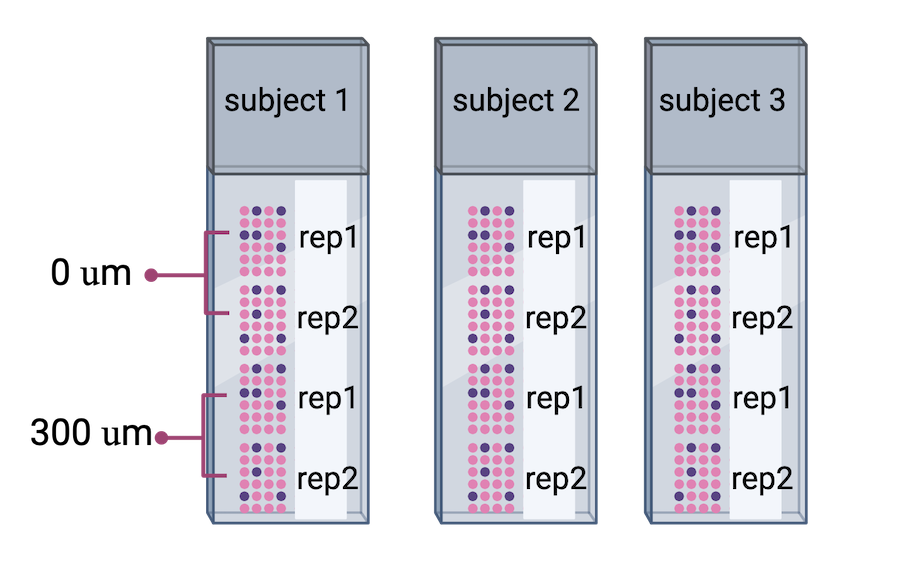

The authors proceeded in their spatial transcriptomics study by selecting wwo pairs of spatially adjacent replicates from three neurotypical donors. The second pair of replicates was taken from 300 microns posterior to the first pair of replicates.

Adapted from

Maynard et al, Nat

Neurosci 24, 425–436 (2021).

Created with BioRender.com.

Adapted from

Maynard et al, Nat

Neurosci 24, 425–436 (2021).

Created with BioRender.com.

Challenge 2: What do you notice?

What do you notice about the experimental design that might create issues during data analysis? Why might the authors have done the experiment this way? Is there anything that can be done about this?

Human brain tissues start to deteriorate at death. Brain banks that provide tissue for studies requiring intact mRNA will quickly remove the brain and rapidly weigh, examine, dissect and freeze it to optimize mRNA integrity. For this study, dorsolateral prefrontal cortex samples were embedded in a medium (see Methods section) and then cryosectioned. Sections were then placed on chilled Visium slides, fixed and stained.

This whole process might have depended on donor availability. All three donors were neurotypical, and it’s not clear how they died (e.g., an accident, a terminal illness, old age) and how that might have impacted availability or mRNA quality. Suffice it to say that it might not have been possible to predict when samples would be available, so it might not have been possible to randomize the samples from each donor to different Visium slides.

Sometimes you might have to confound variables in your study due to sample availability or other factors. The key thing is to know that confounding has occurred.

Important considerations for rigorous, reproducible experiments

Good experimental design plays a critical role in obtaining reliable and meaningful results and is an essential feature of rigorous, reproducible experiments. Designed experiments aim to describe and explain variability under experimental conditions. Variability is natural in the real world. A medication given to a group of patients will affect each of them differently. A specific diet given to a cage of mice will affect each mouse differently. Ideally if something is measured many times, each measurement will give exactly the same result and will represent the true value. This ideal doesn’t exist in the real world. Variability is a feature of natural systems and also a natural part of every experiment we undertake.

Replication

To figure out whether a difference in responses is real or inherently random, replication applies the same treatment to multiple experimental units. The variability of the responses within a set of replicates provides a measure against which we can compare differences among different treatments. Experimental error describes the variability in the responses. Random variation (a.k.a random error or noise) reflects imprecision, but not inaccuracy. Larger sample sizes reduce this imprecision.

In addition to random (experimental) error, systematic error or bias occurs when there are deviations in measurements or observations that consistently either overestimate or underestimate the true value. As an example, a scale might be calibrated so that mass measurements are consistently too high or too low. Unlike random error, systematic error is consistent in one direction, is predictable and follows a pattern. Larger sample sizes don’t correct for systematic bias; equipment or measurement calibration does. Technical replicates define this systematic bias by running the same sample through the machine or measurement protocol multiple times to characterize the variation caused by equipment or protocols.

A biological replicate measures different biological samples in parallel to estimate the variation caused by the unique biology of the samples. The sample or group of samples are derived from the same biological source, such as cells, tissues, organisms, or individuals. Biological replicates assess the variability and reproducibility of experimental results. The greater the number of biological replicates, the greater the precision (the closeness of two or more measurements to each other). Having a large enough sample size to ensure high precision is necessary to ensure reproducible results. Note that increasing the number of technical replicates will not help to characterize biological variability! It is used to characterize systematic error, not experimental error.

Challenge 3: Which kind of error?

A study used to determine the effect of a drug on weight loss could

have the following sources of experimental error. Classify the following

sources as either biological, systematic, or random error.

1). A scale is broken and provides inconsistent readings.

2). A scale is calibrated wrongly and consistently measures mice 1 gram

heavier.

3). A mouse has an unusually high weight compared to its experimental

group (e.g., it is an outlier).

4). Strong atmospheric low pressure and accompanying storms affect

instrument readings, animal behavior, and indoor relative humidity.

1). random, because the scale is broken and provides any kind of

random reading it comes up with (inconsistent reading)

2). systematic

3). biological

4). random or systematic; you argue which and explain why

Challenge 4: How many technical and biological replicates?

In each scenario described below, identify how many technical and how many biological replicates are represented. What conclusions can be drawn about experimental error in each scenario?

1). One person is weighed on a scale five times.

2). Five people are weighed on a scale one time each.

3). Five people are weighed on a scale three times each.

4). A cell line is equally divided into four samples. Two samples

receive a drug treatment, and the other two samples receive a different

treatment. The response of each sample is measured three times to

produce twelve total observations. In addition to the number of

replicates, can you identify how many experimental units there

are?

5). A cell line is equally divided into two samples. One sample receives

a drug treatment, and the other sample receives a different treatment.

Each sample is then further divided into two subsamples, each of which

is measured three times to produce twelve total observations. In

addition to the number of replicates, can you identify how many

experimental units there are?

1). One biological sample (not replicated) with five technical

replicates. The only conclusion to be drawn from the measurements would

be better characterization of systematic error in measuring. It would

help to describe variation produced by the instrument itself, the scale.

The measurements would not generalize to other people.

2). Five biological replicates with one technical measurement (not

replicated). The conclusion would be a single snapshot of the weight of

each person, which would not capture systematic error or variation in

measurement of the scale. There are five biological replicates, which

would increase precision, however, there is considerable other variation

that is unaccounted for.

3). Five biological replicates with three technical replicates each. The

three technical replicates would help to characterize systematic error,

while the five biological replicates would help to characterize

biological variability.

4). Four biological replicates with three technical replicates each. The

three technical replicates would help to characterize systematic error,

while the four biological replicates would help to characterize

biological variability. Since the treatments are applied to each of the

four samples, there are four experimental units.

5). Two biological replicates with three technical replicates each.

Since the treatments are applied to only the two original samples, there

are only two experimental units.

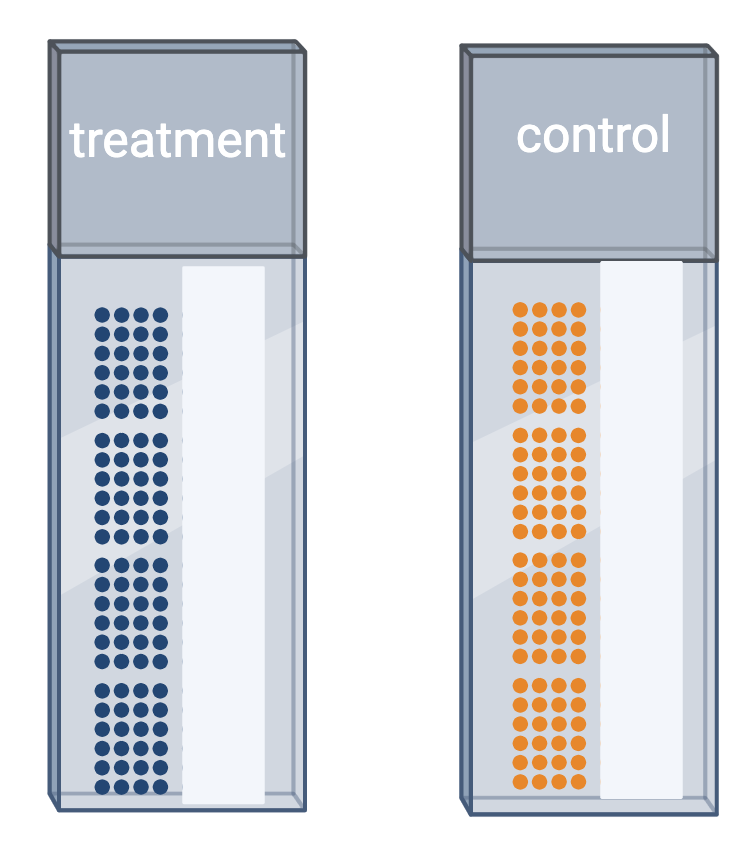

Randomization

Randomization minimizes bias, moderates experimental error (a.k.a. noise), and ensures that our comparisons between treatment groups are valid. Randomized studies assign experimental units to treatment groups randomly by pulling a number out of a hat or using a computer’s random number generator. The main purpose for randomization comes later during statistical analysis, where we compare the data we have with the data distribution we might have obtained by random chance. Random assignment (allocation) of experimental units to treatment groups prevents the subjective bias that might be introduced by an experimenter who selects, even in good faith and with good intention, which experimental units should get which treatment.

Randomization also accounts for or cancels out effects of “nuisance” variables like the time or day of the experiment, the investigator or technician, equipment calibration, exposure to light or ventilation in animal rooms, or other variables that are not being studied but that do influence the responses. Randomization balances out the effects of nuisance variables between treatment groups by giving an equal probability for an experimental unit to be assigned to any treatment group.

Challenge 5: Treatment and control samples

You plan to place samples of treated tissue on one slide and samples

of the controls on another slide. What will happen when it is time for

data analysis? What could you have done differently?

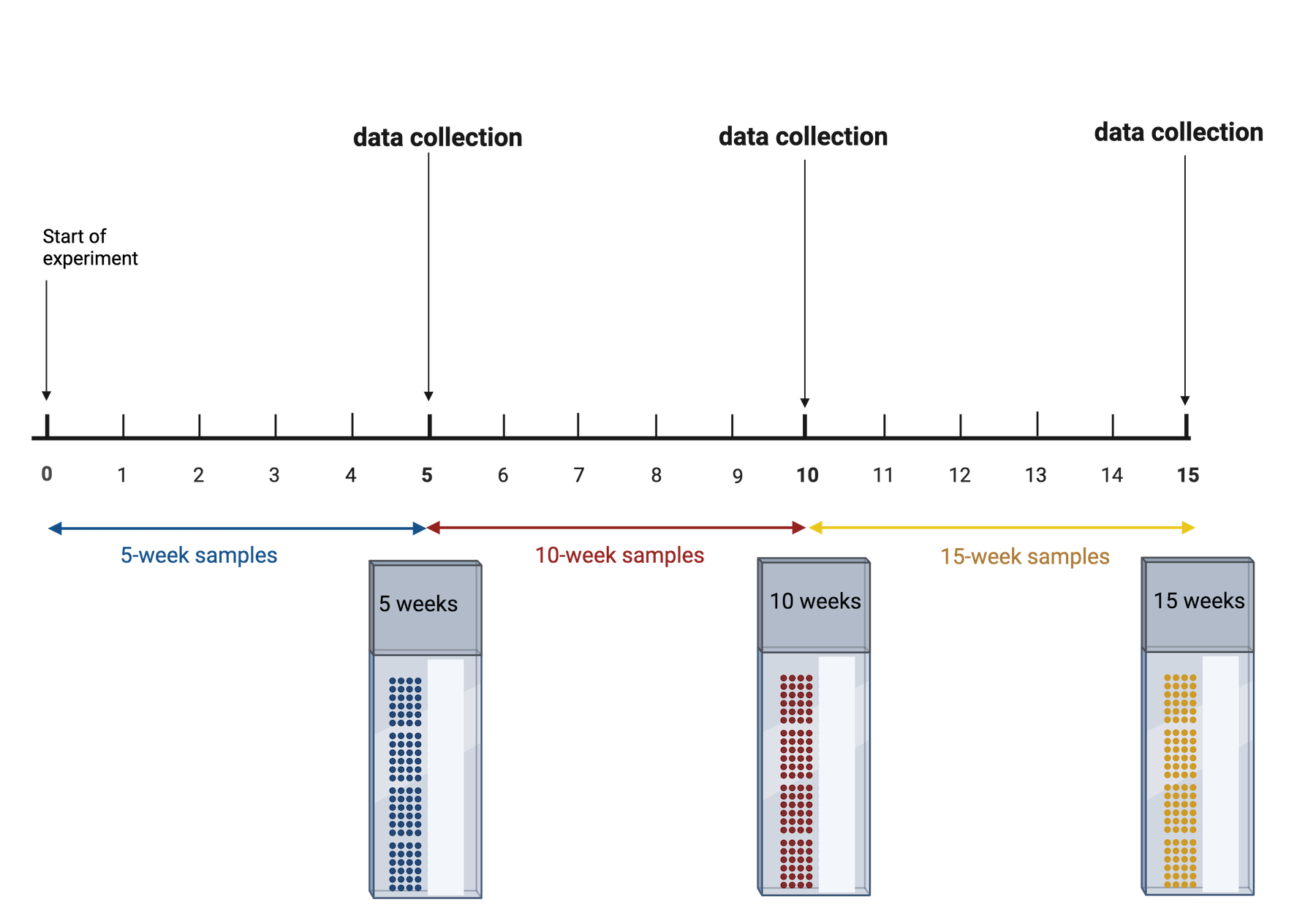

Challenge 6: Time points

Your study requires data collection at three time points: 5, 10, and 15 weeks. At the end of 5 weeks, you will run samples through the entire Visium workflow. You will repeat this for the 10- and 15-week samples when each of those time points is reached. What will happen when it is time for data analysis? What could you have done differently?

The issue is that time point is now confounded. A better approach would be to start the 15-week samples, then 5 weeks later start the 10-week samples, then 5 weeks later start the 5-week samples. This way you can run all of your samples at the same time. None of your samples will have spent a long time in the freezer, so you won’t need to worry about the variation that might cause. You won’t need to worry about the time point confounding the results.

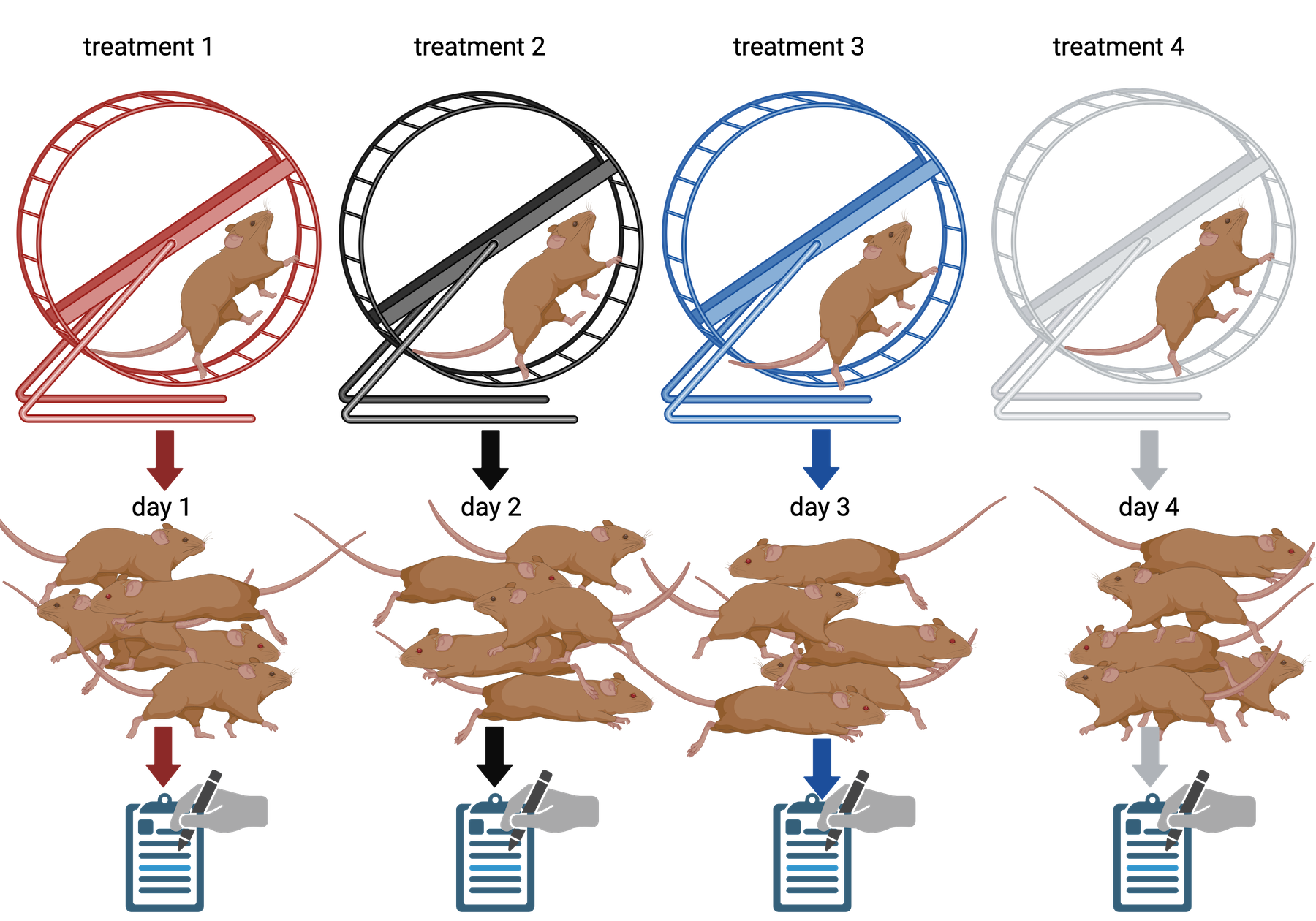

Challenge 7: The efficient technician

Your technician colleague finds a way to simplify and expedite an

experiment. The experiment applies four different wheel-running

treatments to twenty different mice over the course of five days. Four

mice are treated individually each day for two hours each with a random

selection of the four treatments. Your clever colleague decides that a

simplified protocol would work just as well and save time. Run treatment

1 five times on day 1, treatment 2 five times on day 2, and so on. Some

overtime would be required each day but the experiment would be

completed in only four days, and then they can take Friday off! Does

this adjustment make sense to you?

Can you foresee any problems with the experimental results?

Since each treatment is run on only one day, the day effectively becomes the experimental unit (explain this). Each experimental unit (day) has five samples (mice), but only one replication of each treatment. There is no valid way to compare treatments as a result. There is no way to separate the treatment effect from the day-to-day differences in environment, equipment setup, personnel, and other extraneous variables.

Statistical power

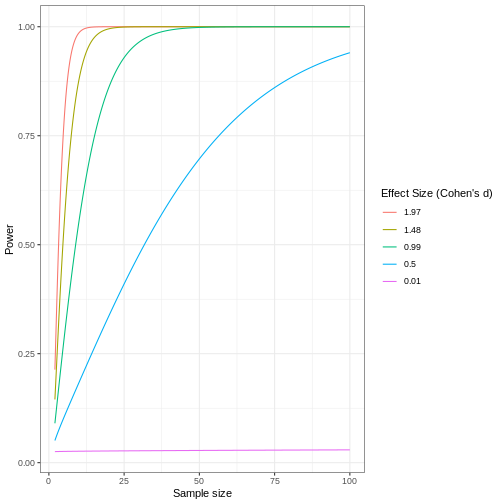

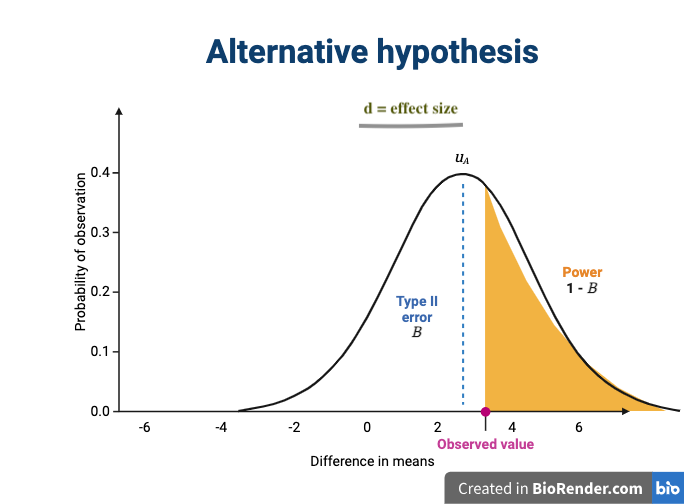

Statistical power represents the probability of detecting a real treatment effect. Review the following figure to explore the relationships between effect size, sample size, and power. What is the relationship between effect size and sample size? Between sample size and power?

Adapted from How to Create Power Curves in ggplot by Levi Baguley

Notice that to detect a standardized effect size of 0.5 at 80% power, you would need a sample size of approximately 70. Larger effect sizes require much smaller sample sizes. Very small effects such as .01 never reach the 80% power threshold without enormous samples sizes in the hundreds of thousands.

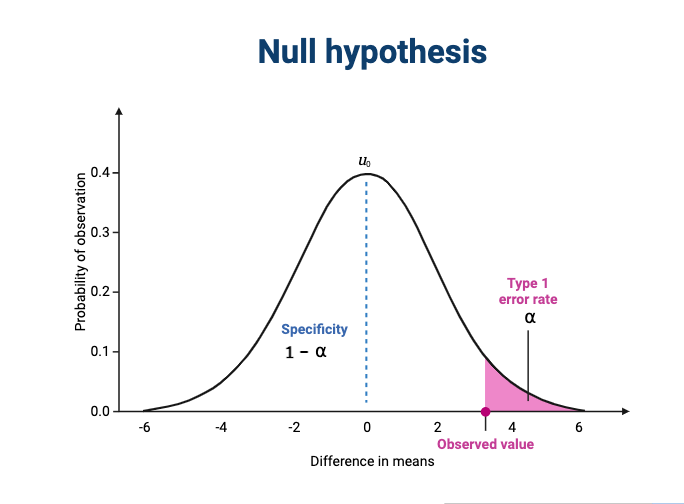

The effect size is shown in the figure above as the difference in means between the null and alternative hypotheses. Statistical power, also known as sensitivity, is the power to detect this effect.

To learn more about statistical power, effect sizes and sample size calculations, see Power and sample size by Krzywinski & Altman , Nature Methods 10, pages 1139–1140 (2013).

- Use

.mdfiles for episodes when you want static content - Use

.Rmdfiles for episodes when you need to generate output - Run

sandpaper::check_lesson()to identify any issues with your lesson - Run

sandpaper::build_lesson()to preview your lesson locally

Content from Data Preprocessing

Last updated on 2025-10-07 | Edit this page

Estimated time: 95 minutes

Overview

Questions

- What data files should I expect from the Visium assay?

- Which data preprocessing steps are required to prepare the raw data files for further analysis?

- What software will we use for data preprocessing?

Objectives

- Explain how to use markdown with the new lesson template

- Demonstrate how to include pieces of code, figures, and nested challenge blocks

Introduction

The Space Ranger

software is a popular, though by no means only, set of pipelines for

preprocessing of Visium data. We focus on it here. It provides the

following output:

| File Name | Description |

|---|---|

| web_summary.html | Run summary metrics and plots in HTML format |

| cloupe.cloupe | Loupe Browser visualization and analysis file |

| spatial/ | Folder containing outputs that capture the spatiality of the data. |

| spatial/aligned_fiducials.jpg | Aligned fiducials QC image |

| spatial/aligned_tissue_image.jpg | Aligned CytAssist and Microscope QC image. Present only for CytAssist workflow |

| spatial/barcode_fluorescence_intensity.csv | CSV file containing the mean and standard deviation of fluorescence intensity for each spot and each channel. Present for the fluorescence image input specified by –darkimage |

| spatial/cytassist_image.tiff | Input CytAssist image in original resolution that can be used to re-run the pipeline. Present only for CytAssist workflow |

| spatial/detected_tissue_image.jpg | Detected tissue QC image. |

| spatial/scalefactors_json.json | Scale conversion factors for spot diameter and coordinates at various image resolutions |

| spatial/spatial_enrichment.csv | Feature spatial autocorrelation analysis using Moran’s I in CSV format |

| spatial/tissue_hires_image.png | Downsampled full resolution image. The image dimensions depend on the input image and slide version |

| spatial/tissue_lowres_image.png | Full resolution image downsampled to 600 pixels on the longest dimension |

| spatial/tissue_positions.csv | CSV containing spot barcode; if the spot was called under (1) or out (0) of tissue, the array position, image pixel position x, and image pixel position y for the full resolution image |

| analysis/ | Folder containing secondary analysis data including graph-based clustering and K-means clustering (K = 2-10); differential gene expression between clusters; PCA, t-SNE, and UMAP dimensionality reduction. |

| metrics_summary.csv | Run summary metrics in CSV format |

| probe_set.csv | Copy of the input probe set reference CSV file. Present for Visium FFPE and CytAssist workflow |

| possorted_genome_bam.bam | Indexed BAM file containing position-sorted reads aligned to the genome and transcriptome, annotated with barcode information |

| possorted_genome_bam.bam.bai | Index for possorted_genome_bam.bam. In cases where the reference transcriptome is generated from a genome with very long chromosomes (>512 Mbp), Space Ranger v2.0+ generates a possorted_genome_bam.bam.csi index file instead. |

| filtered_feature_bc_matrix/ | Contains only tissue-associated barcodes in MEX format. Each element of the matrix is the number of UMIs associated with a feature (row) and a barcode (column). This file can be input into third-party packages and allows users to wrangle the barcode-feature matrix (e.g. to filter outlier spots, run dimensionality reduction, normalize gene expression). |

| filtered_feature_bc_matrix.h5 | Same information as filtered_feature_bc_matrix/ but in HDF5 format. |

| raw_feature_bc_matrices/ | Contains all detected barcodes in MEX format. Each element of the matrix is the number of UMIs associated with a feature (row) and a barcode (column). |

| raw_feature_bc_matrix.h5 | Same information as raw_feature_bc_matrices/ in HDF5 format. |

| | raw_probe_bc_matrix.h5 |

| molecule_info.h5 | Contains per-molecule information for all molecules that contain a valid barcode, valid UMI, and were assigned with high confidence to a gene or protein barcode. This file is required for additional analysis spaceranger pipelines including aggr, targeted-compare and targeted-depth. |

Fortunately, you will not need to look at all of these files. We provide a brief description for you in case you are curious or need to look at one of the files for technical reasons.

The two files that you will use are

raw_feature_bc_matrix.h5 and

filtered_feature_bc_matrix.h5. These files have an

h5 suffix, which means that they are HDF5 files. HDF5 is

a compressed file format for storing complex high-dimensional data. HDF5

stands for Hierarchical Data Formats, version 5. There is an R

package designed to read and write HDF5 files called rhdf5.

This was one of the packages which you installed during the lesson

setup.

Briefly, HDF5 organizes data into directories within the

compressed file. There are three “files” within the HDF5

file:

| File Name | Description |

|---|---|

| features.csv | Contains the features (i.e. genes in this case) for each row in the data matrix. |

| barcodes.csv | Contains the probe barcodes for each spot on the tissue block. |

| matrix.mtx | Contains the counts for each gene in each spot. Features (e.g. genes) are in rows and barcodes (e.g. spots) are in columns. |

Set up Environment

Go to the File menu and select

Open Project. Open the spatialRNA project

which you created in the workshop setup.

First, we will load in some utility functions to make our lives a bit

easier. The source

function reads an R file and runs the code in it. In this case, this

will load several useful functions.

We will then load the libraries that we need for this lesson.

R

suppressPackageStartupMessages(library(tidyverse))

suppressPackageStartupMessages(library(hdf5r))

suppressPackageStartupMessages(library(Seurat))

source("https://raw.githubusercontent.com/smcclatchy/spatial-transcriptomics/main/code/spatial_utils.R")

Load Raw and Filtered Spatial Expression Data

In this course, we will use the Seurat R environment, which was

originally designed for analysis of single-cell RNA-seq data, but has

been extended for spatial transcriptomics data. The Seurat website

provides helpful vignettes

and a concise command cheat

sheet. SpatialExperiment

in R and scanpy

in python, amongst others, are also frequently used in analyzing spatial

transcriptomics data.

We will use the Load10X_Spatial

function from Seurat to read in the spatial transcriptomics data. These

are the data which you downloaded in the setup section.

First, we will read in the raw data for sample 151673.

R

raw_st <- Load10X_Spatial(data.dir = "./data/151673",

filename = "151673_raw_feature_bc_matrix.h5",

filter.matrix = FALSE)

If you did not see any error messages, then the data loaded in and

you should see an raw_st object in your

Environment tab on the right.

If the data does not load in correctly, verify that the students used the mode = “wb” argument in download.file() during the Setup. We have found that Windows users have to use this.

Let’s look at raw_st, which is a Seurat object.

R

raw_st

OUTPUT

An object of class Seurat

33538 features across 4992 samples within 1 assay

Active assay: Spatial (33538 features, 0 variable features)

1 layer present: counts

1 spatial field of view present: slice1The output says that we have 33,538 features and 4,992 samples with one assay. Feature is a generic term for anything that we measured. In this case, we measured gene expression, so each feature is a gene. Each sample is one spot on the spatial slide. So this tissue sample has 33,538 genes assayed across 4,992 spots.

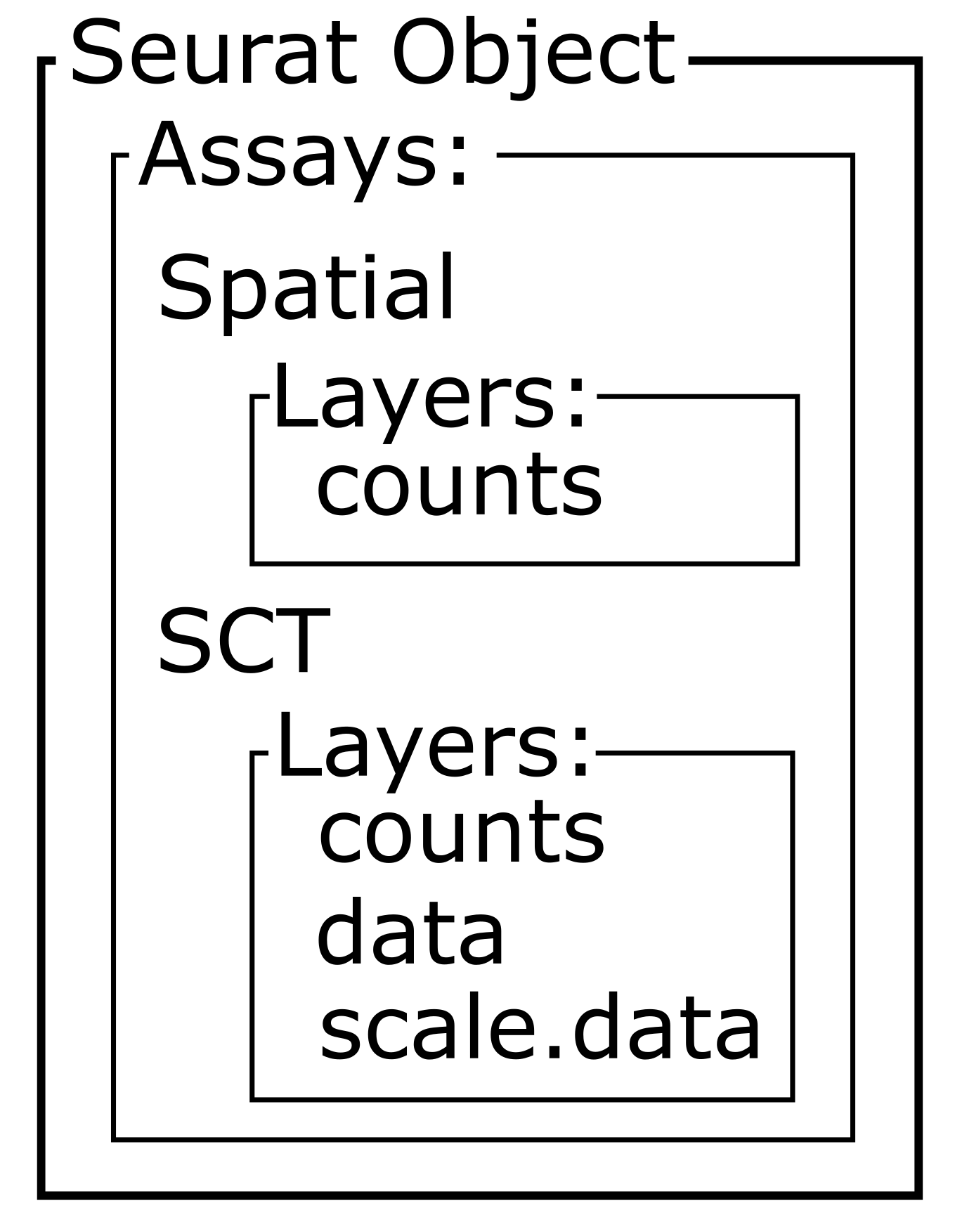

An experiment may have more than one assay. For example, you may run both RNA sequencing and chromatin accessibility in the same set of samples. In this case, we have one assay – RNA-seq. Each assay will, in turn, have one or more layers. Each layer stores a different form of the data. Initially, our Seurat object has a single counts layer, holding the raw, un-normalized RNA-seq counts for each spot. Subsequent downstream analyses can populate other layers, including normalized counts (conventionally stored in a data layer) or variance-stabilized counts (conventionally stored in a scale.data layer).

There is also a single image called slice1 attached to the Seurat object.

Next, we will load in the filtered data. Use the code above and look

in a file browser to identify the filtered file for sample

151673.

Challenge 1: Read in filtered HDF5 file for sample 151673.

Open a file browser and navigate to

Desktop/spatialRNA/data/151673. Can you find an HDF5 file

(with an .h5 suffix) that has the word “filtered” in

it?

If so, read that file in and assign it to a variable called

filter_st.

R

filter_st <- Load10X_Spatial(data.dir = "./data/151673",

filename = "151673_filtered_feature_bc_matrix.h5")

Once you have the filtered data loaded in, look at the object.

R

filter_st

OUTPUT

An object of class Seurat

33538 features across 3639 samples within 1 assay

Active assay: Spatial (33538 features, 0 variable features)

1 layer present: counts

1 spatial field of view present: slice1The raw and filtered data both have the same number of genes (33,538). But the two objects have different numbers of spots. The raw data has 4,992 spots and the filtered data has 3,639 spots.

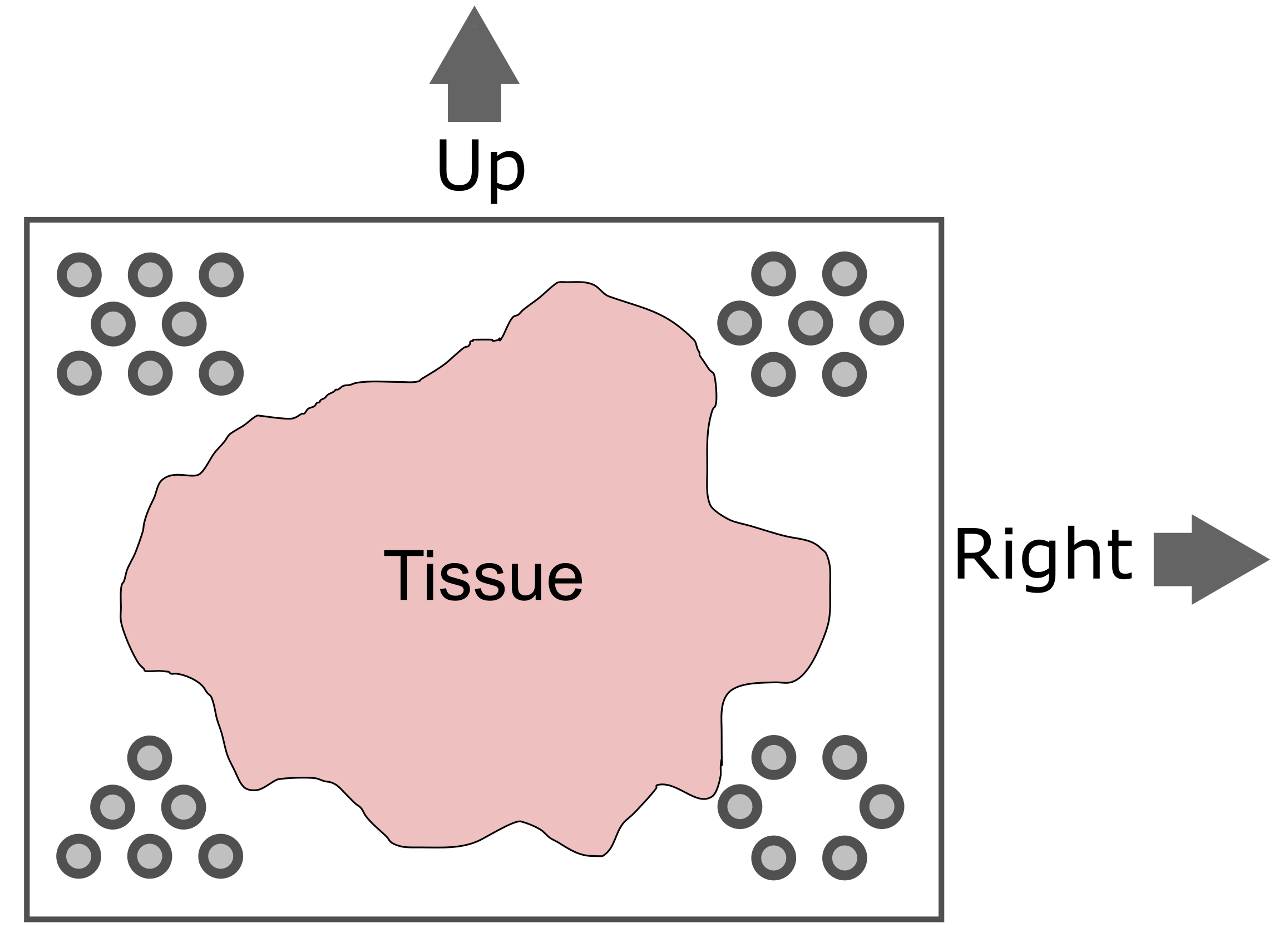

Look at the H&E slide below and notice the grey fiducial spots around the border. These are used by the spatial transcriptomics software to register the H&E image and the spatially-barcoded sequences.

Challenge 2: How does the computer know how to orient the image?

Look carefully at the spots in the H&E image above. Are the spots symmetric? Is there anything different about the spots that might help a computer to assign up/down and left/right to the image?

Look at the spots in each corner. In the upper-left, you will see the following patterns:

The patterns in each corner allow the spatial transcriptomics software to orient the slide.

Add Spot Metadata

The 10x Space Ranger pipeline automatically segments the tissue to

identify it within the background of the slide. This information is

encoded in the “tissue positions” file. Each row in the file corresponds

to a spot. The first column indicates whether the spot was (1) or was

not (0) identified as being within the tissue region by the segmentation

procedure. This file does not contain column names in earlier versions

of Space Ranger, but does starting with version SpaceRanger 2.0.

R

tissue_position <- read_csv("./data/151673/spatial/tissue_positions_list.csv",

col_names = FALSE, show_col_types = FALSE) %>%

column_to_rownames('X1')

colnames(tissue_position) <- c("in_tissue",

"array_row",

"array_col",

"pxl_row_in_fullres",

"pxl_col_in_fullres")

It is important to note that the order of the spots differs between

the Seurat object and the tissue position file. We need to reorder the

tissue positions to match the Seurat object. We can extract the spot

barcodes using the Cells

function. This is named for the earlier versions of Seurat, which

processed single cell transcriptomic data. In this case, we are getting

spot IDs, even though the function is called

Cells.

Next, we will add the tissue positions to the Seurat object’s metadata. Note that Seurat will align the spot barcodes in this process.

R

raw_st <- AddMetaData(object = raw_st, metadata = tissue_position)

filter_st <- AddMetaData(object = filter_st, metadata = tissue_position)

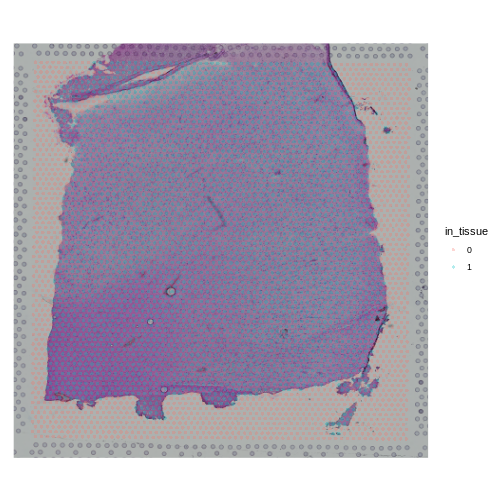

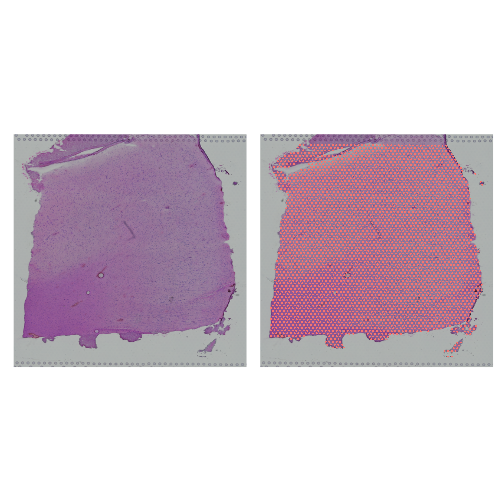

Next, we will plot the spot annotation, indicating spots that are in the tissue in blue and background spots in red.

R

SpatialPlot(raw_st, group.by = "in_tissue", alpha = 0.3)

WARNING

Warning: `aes_string()` was deprecated in ggplot2 3.0.0.

ℹ Please use tidy evaluation idioms with `aes()`.

ℹ See also `vignette("ggplot2-in-packages")` for more information.

ℹ The deprecated feature was likely used in the Seurat package.

Please report the issue at <https://github.com/satijalab/seurat/issues>.

This warning is displayed once every 8 hours.

Call `lifecycle::last_lifecycle_warnings()` to see where this warning was

generated.

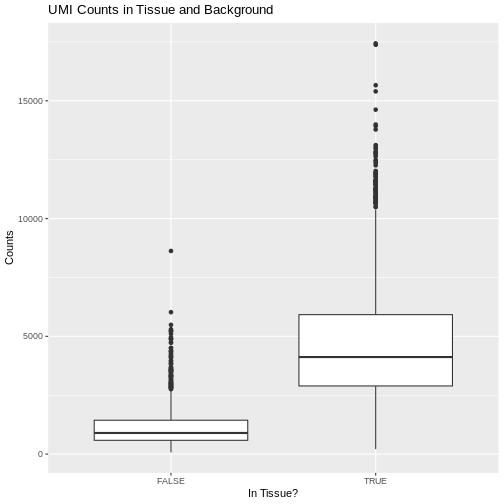

The 10x platform tags each molecule with a Unique Molecular

Identifier (UMI). This allows us to keep only one unique sequencing read

per molecule and to exclude those arising from PCR duplication. We

expect most of the UMI counts to be in the tissue spots. The Seurat

object metadata contains the UMI count in each spot in a column called

nCount_Spatial. Let’s plot the UMI counts in the tissue and

background spots.

R

raw_st@meta.data %>%

ggplot(aes(as.logical(in_tissue), nCount_Spatial)) +

geom_boxplot() +

labs(title = 'UMI Counts in Tissue and Background',

x = 'In Tissue?',

y = 'Counts')

As expected, we see most of the counts in the tissue spots.

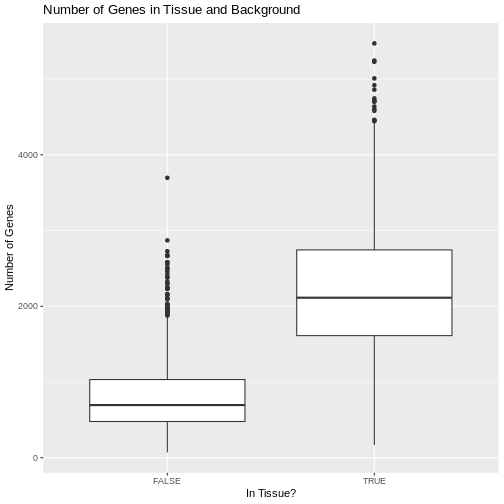

We can also plot the number of genes detected in each spot. Seurat

calls genes features, so we will plot the

nFeature_Spatial value. This is stored in the metadata of

the Seurat object.

R

raw_st@meta.data %>%

ggplot(aes(as.logical(in_tissue), nFeature_Spatial)) +

geom_boxplot() +

labs(title = 'Number of Genes in Tissue and Background',

x = 'In Tissue?',

y = 'Number of Genes')

Challenge 3: Why might there be UMI counts outside of the tissue boundaries?

We expect UMI counts in the spots which overlap with the tissue section. What reasons can you think of that might lead UMI counts to occur in the background spots?

- When the tissue section is lysed, some transcripts may leak out of the cells and into the background region of the slide.

Up to this point, we have been working with the raw, unfiltered data to show you how the spots are filtered. However, in most workflows, you will work directly with the filtered file. From this point forward, we will work with the filtered data object.

Let’s plot the spots in the tissue in the filtered object to verify that it is only using spots in the tissue.

R

plot1 <- SpatialDimPlot(filter_st, alpha = c(0, 0)) +

NoLegend()

plot2 <- SpatialDimPlot(filter_st) +

NoLegend()

plot1 | plot2

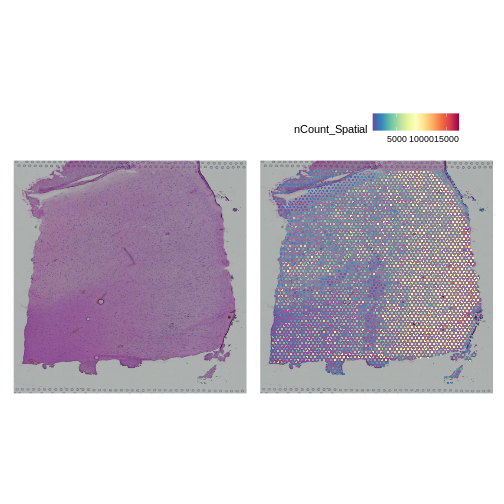

Plot UMI and Gene Counts across Tissue

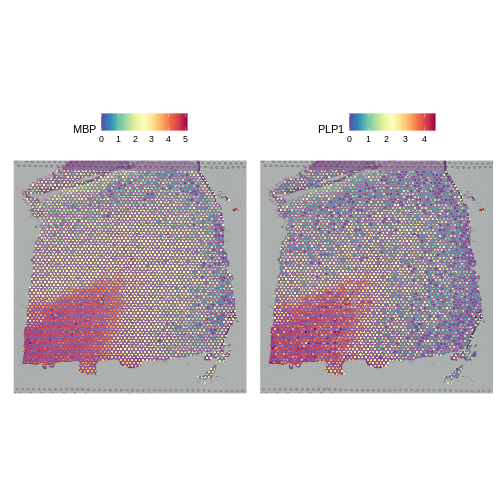

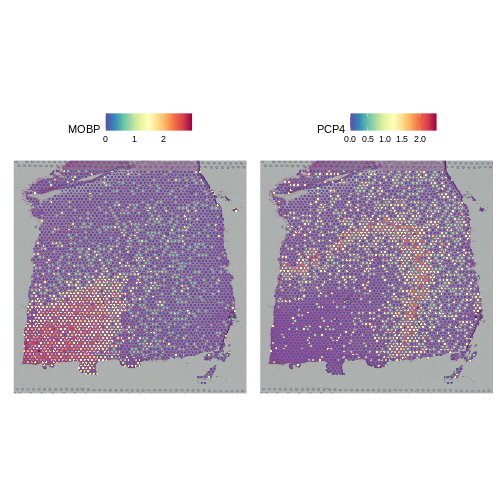

Next, we want to look at the distribution of UMI counts and numbers of genes in each spot across the tissue. This can be helpful in identifying technical issues with the sample processing.

It is useful to first think about what we expect. In the publication associated with this data, the authors show the structure that they expect in this tissue section of the human dorsolateral prefrontal cortex (DLPFC). In the figure below, they show a series of layers, from L1 to L6, arranged from the upper right to the lower left. In the lower left corner, they expect to see White Matter (WM). So we expect to see some series of layers arranged from the upper right to the lower left.

We will use Seurat’s SpatialFeaturePlot

function to look at these values. We can color the spots based on the

spot metadata stored in the Seurat object. You can find these column

names by looking at the meta.data slot of the Seurat

object.

R

head(filter_st@meta.data)

OUTPUT

orig.ident nCount_Spatial nFeature_Spatial in_tissue

AAACAAGTATCTCCCA-1 SeuratProject 8458 3586 1

AAACAATCTACTAGCA-1 SeuratProject 1667 1150 1

AAACACCAATAACTGC-1 SeuratProject 3769 1960 1

AAACAGAGCGACTCCT-1 SeuratProject 5433 2424 1

AAACAGCTTTCAGAAG-1 SeuratProject 4278 2264 1

AAACAGGGTCTATATT-1 SeuratProject 4004 2178 1

array_row array_col pxl_row_in_fullres pxl_col_in_fullres

AAACAAGTATCTCCCA-1 50 102 8468 9791

AAACAATCTACTAGCA-1 3 43 2807 5769

AAACACCAATAACTGC-1 59 19 9505 4068

AAACAGAGCGACTCCT-1 14 94 4151 9271

AAACAGCTTTCAGAAG-1 43 9 7583 3393

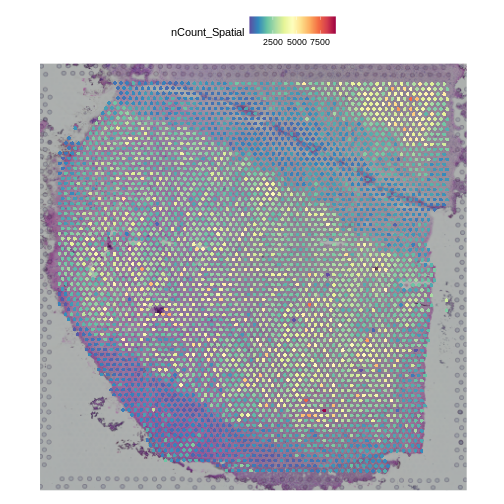

AAACAGGGTCTATATT-1 47 13 8064 3665To plot the UMI counts, we will use the nCount_Spatial

column in the spot metadata.

R

plot1 <- SpatialDimPlot(filter_st, alpha = c(0, 0)) +

NoLegend()

plot2 <- SpatialFeaturePlot(filter_st, features = "nCount_Spatial")

plot1 | plot2

In this case, we see a band of higher counts running from upper left to lower right. There are also bands of lower counts above and below this band. The band in the upper right corner may be due to the fissure in the tissue. It is less clear why the expression is low in the lower-left corner.

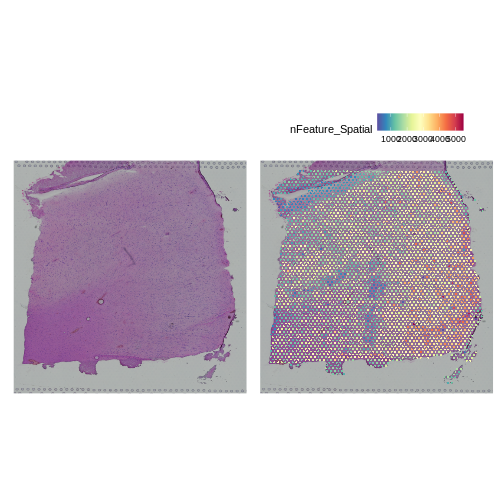

We can also look at the number of genes detected in each spot using

nFeature_Spatial.

R

plot1 <- SpatialDimPlot(filter_st, alpha = c(0, 0)) +

NoLegend()

plot2 <- SpatialFeaturePlot(filter_st, features = "nFeature_Spatial")

plot1 | plot2

It is difficult to lay out a broad set of rules that will work for all types of tissues and samples. Some tissues may have homogeneous UMI counts across the section, while others may show variation in UMI counts due to tissue structure. For example, in cancer tissue sections, stromal cells tend to have lower counts than tumor cells and this should be evident in a UMI count plot. In the brain sample below, we might expect some variation in UMI counts in different layers of the brain.

Removing Genes with Low Expression

In order to build this lesson, we needed to reduce the size of the data. To do this, we are going to filter out genes that have no expression across the cells.

How many genes to we have before filtering?

R

paste(nrow(filter_st), "genes.")

OUTPUT

[1] "33538 genes."Next, we will get the raw counts, calculate the sum of each gene’s exprsssion across all spots, and filter the Seurat object to retain genes with summed counts greater than zero.

R

counts <- LayerData(filter_st, 'counts')

gene_sums <- rowSums(counts)

keep_genes <- which(gene_sums > 0)

filter_st <- filter_st[keep_genes,]

WARNING

Warning: Not validating Centroids objects

Not validating Centroids objectsWARNING

Warning: Not validating FOV objects

Not validating FOV objects

Not validating FOV objects

Not validating FOV objects

Not validating FOV objects

Not validating FOV objectsWARNING

Warning: Not validating Seurat objectsHow many genes to we have after filtering?

R

paste(nrow(filter_st), "genes.")

OUTPUT

[1] "21842 genes."So we removed about 11,700 genes that had zero counts.

Conclusion

- The 10x Space Ranger pipeline provides you with an unfiltered and a filtered data file.

- The HDF5 file ends with an

h5extension and contains the barcodes, features (genes), and counts matrix. - Seurat is one of several popular environments for analyzing spatial transcriptomics data.

- It is important to know something about the structure of the tissue which you are analyzing.

- Plotting total counts and genes in each spot may help to identify quality control issues.

Content from Remove Low-quality Spots

Last updated on 2025-10-07 | Edit this page

Estimated time: 75 minutes

Overview

Questions

- How do I remove low-quality spots?

- What kinds of problems produce low-quality spots?

- What happens if I skip quality control and proceed with analysis?

Objectives

- Understand how to look for low quality spots.

- Decide whether to retain or remove low quality spots.

Introduction

Spatial transcriptomics involves a complex process that may involve some technical failures. If the processing of the entire slide fails, it should be obvious due to a large number of gene appearing in spots outside of the tissue or low UMIs across the whole tissue.

However, there can also be variation in spot quality in a slide that has largely high-quality spots. These artifacts are much rarer than in single-cell transcriptomics because the process of tissue sectioning is less disruptive than tissue dissociation. Because of this, we recommend light spot filtering.

There are three metrics that we will use to identify and remove low-quality spots:

- Mitochondrial gene expression,

- Total UMI counts,

- Number of detected genes.

During tissue processing, it is possible that some cells will be lysed, spilling out the transcripts, but retaining the mitochondria. These spots will appear with much higher mitochondrial gene expression. High UMI counts or number of detected genes might also indicate spots with bleed over of lysed content from neighboring cells.

There may be regions of the slide with artifacts introduced during slide preparation. These include tearing and folding of the tissue. As mentioned above, the 10x Space Ranger pipeline automatically segments the tissue boundary. This generally performs well at a large scale. However, at high resolution, it may fail to properly assign spots within small tears or along the jagged edge to the background. Such spots might be identified by low UMI counts or number of detected genes. Conversely, folded tissue may have a higher density of cells, which could result in high UMI counts or number of detected genes. Pathologist annotation of H&E image can flag artifactual regions that are then excluded from downstream analysis. Image processing techniques may also be able to automatically identify and exclude artifactual regions, particularly folds. Using pathologist annotation or image processing to identify tissue abnormalities is less common than using the simple data-driven metrics considered here, and we do not discuss them further.

These metrics may be tissue-dependent. In some tissues, there may be biological reasons for differential expression across the tissue. For example, in a cancer sample, mitochondrial or total gene expression may vary between stromal and tumor regions. It will be important for you to familiarize yourself with the structure of the tissue that you are analyzing in order to make rational judgments about filtering.

Filtering by Mitochondrial Gene Count

In single-cell RNA sequencing experiments, the tissue is digested and the cells are dissociated. This mechanical disruption is stressful to the cells and some of them are damaged in the process. Elevated levels of mitochondrial genes often indicate cell death or damage because, when a cell’s membrane is compromised, it loses most cytoplasmic content while retaining mitochondrial RNA. Therefore, spots with high mitochondrial RNA may represent damaged or dying cells, and their exclusion helps focus the analysis on healthy, intact cells.

More details on this relationship can be found in the literature on mitochondrial DNA and cell death.

However, in spatial transcriptomics, the tissue is either frozen or formalin-fixed and there is much less mechanical disruption of the tissue. Because of this, we are skeptical of the value of filtering spots based on mitochondrial gene counts.

For completeness, we show how to obtain the mitochondrial genes, calculate the percentage of counts produced by these genes in each spot, and add this to the Seurat object metadata.

We will search the gene symbols in the feature metadata to identify mitochondrial genes. We do not need to find all genes in these categories, so we will search for genes with symbols that start with “MT”.

The Seurat object is designed to be flexible and may contains several data types. For example, it may contain both gene counts and open chromatin peaks. In this analysis, the Seurat object only contains gene counts. The different types of data are called “Layers” in Seurat and may be accessed using the Layers function.

R

Layers(filter_st)

OUTPUT

[1] "counts"This tells us that the “filter_st” object only contains one data Layer called “counts”. We can access this using the LayerData function using “counts” as an argument.

R

counts <- LayerData(filter_st, 'counts')

head(counts)[,1:5]

OUTPUT

6 x 5 sparse Matrix of class "dgCMatrix"

AAACAAGTATCTCCCA-1 AAACAATCTACTAGCA-1 AAACACCAATAACTGC-1

MIR1302-2HG . . .

AL627309.1 . . .

AL669831.5 . . .

FAM87B . . .

LINC00115 . . .

FAM41C . . .

AAACAGAGCGACTCCT-1 AAACAGCTTTCAGAAG-1

MIR1302-2HG . .

AL627309.1 . .

AL669831.5 . .

FAM87B . .

LINC00115 . .

FAM41C . .The output above may look odd to you since there are no numbers. Notice that the text above the table says “sparse Matrix”. Many of the counts in the file are likely to be zero. Due to the manner in which numbers are stored in computer memory, a zero takes up as much space as a number. If we had to store all of these zeros, it would consume a lot of computer memory. A sparse matrix is a special data structure which only stores the non-zero values. In the table above, each dot (.) represents a position with zero counts.

You don’t have to have the students type out the next block. It may be better to let them focus on the concept rather than typing.

If we look at another part of the “counts” matrix, we can see numbers.

R

counts[20000:20005,1:5]

OUTPUT

6 x 5 sparse Matrix of class "dgCMatrix"

AAACAAGTATCTCCCA-1 AAACAATCTACTAGCA-1 AAACACCAATAACTGC-1

DNAJB1 . . .

TECR 3 1 .

NDUFB7 3 1 1

ZNF333 . . .

ADGRE2 . . .

OR7C1 . . .

AAACAGAGCGACTCCT-1 AAACAGCTTTCAGAAG-1

DNAJB1 1 .

TECR 2 1

NDUFB7 2 4

ZNF333 . .

ADGRE2 . .

OR7C1 . .As you can see in the table above, the gene symbols are stored in the rownames of “counts”. We will find find mitochondrial genes by searching for gene symbols which start with “MT”.

R

mito_pattern <- '^[Mm][Tt]-'

mito_genes <- rownames(counts)[grep(mito_pattern, rownames(counts))]

mito_genes

OUTPUT

[1] "MT-ND1" "MT-ND2" "MT-CO1" "MT-CO2" "MT-ATP8" "MT-ATP6" "MT-CO3"

[8] "MT-ND3" "MT-ND4L" "MT-ND4" "MT-ND5" "MT-ND6" "MT-CYB" We now have a set of mitochondrial genes. We will use these genes to estimate the percentage of gene counts expressed by mitochondrial genes in each cell and add this to the Seurat object. We will pass the mitochondrial gene symbols into PercentageFeatureSet, which will perform the calculation for us.

R

filter_st[["percent.mt"]] <- PercentageFeatureSet(filter_st, pattern = mito_pattern)

This syntax adds a new column called “percent.mt” to the spot metadata.

R

colnames(filter_st@meta.data)

OUTPUT

[1] "orig.ident" "nCount_Spatial" "nFeature_Spatial"

[4] "in_tissue" "array_row" "array_col"

[7] "pxl_row_in_fullres" "pxl_col_in_fullres" "percent.mt" There is no need to have students type out the figure titles and axis labels.

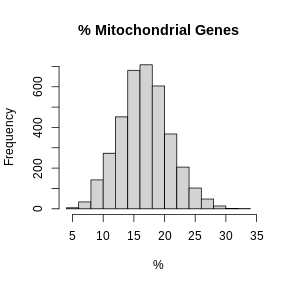

Let’s look at histograms of the ribosomal and mitochondrial gene percentages.

R

hist(FetchData(filter_st, "percent.mt")[,1], main = "% Mitochondrial Genes",

xlab = "%")

In these plots, we are looking for spots which are outside of a normal distribution. It is difficult to generalize how to select a filtering threshold. Some tissue or cell types may have higher mitochondrial gene expression. Further, heterogeneous tissues may have subsets of cells with differing levels of mitochondrial gene expression.

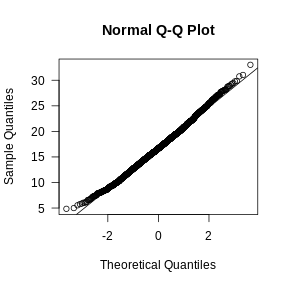

Let’s visually check whether the mitochondrial gene expression is normally distributed.

R

mito_expr <- FetchData(filter_st, "percent.mt")[,1]

qqnorm(mito_expr, las = 1)

qqline(mito_expr)

In this case, there may be a reason to filter out spots with greater than 35% mitochondrial counts.

Filter by UMI Count and Number of Detected Genes

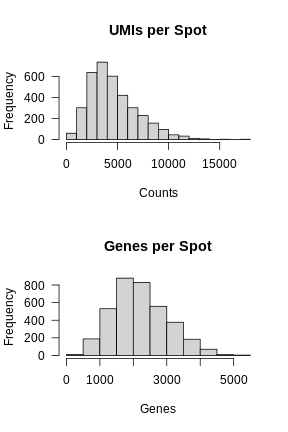

In the previous lesson, we plotted number of UMIs and genes detected spatially across the tissue. Let’s plot these values again, but this time as a histogram.

There is no need to have students type out the figure titles and axis labels.

R

layout(matrix(1:2, ncol = 1))

hist(FetchData(filter_st, "nCount_Spatial")[,1],

main = 'UMIs per Spot', xlab = 'Counts', las = 1)

hist(FetchData(filter_st, "nFeature_Spatial")[,1],

main = 'Genes per Spot', xlab = 'Genes', las = 1)

Again, most of the spots fall within a reasonable distribution. The right tail of the distribution is not very thick. We might filter spots with over 14,000 UMIs or 3,000 genes. We will use these thresholds to add a “keep” column to the Seurat object metadata.

First, we will create variables for each threshold. While we could type the numbers directly into the logical comparison statements, creating variables makes it clear what each number represents.

R

mito_thr <- 32

counts_thr <- 14000

features_thr <- 5000

Next, we will create a “keep” variable which will be TRUE for spots that we want to keep.

R

keep <- FetchData(filter_st, "percent.mt")[,1] < mito_thr

keep <- FetchData(filter_st, "nCount_Spatial")[,1] < counts_thr & keep

keep <- FetchData(filter_st, "nFeature_Spatial")[,1] < features_thr & keep

filter_st$keep <- keep

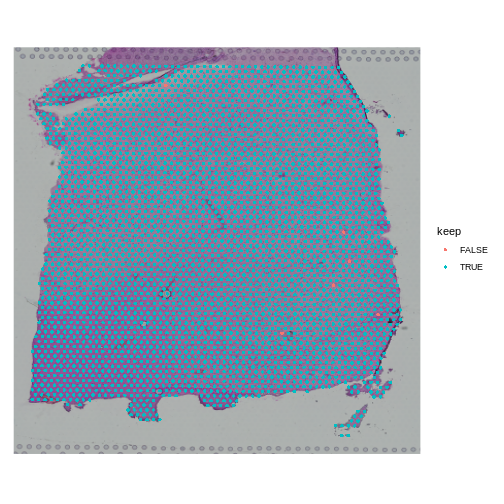

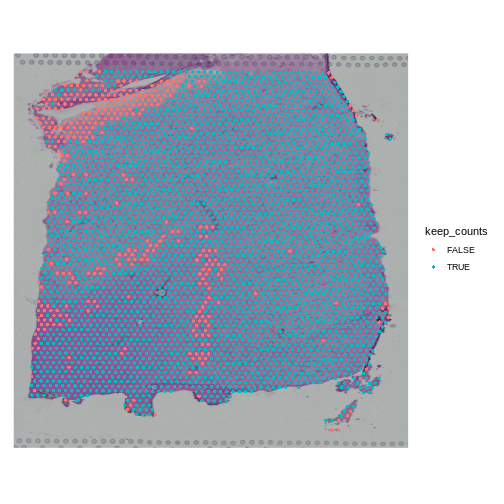

Now let’s plot the spots on the tissue and color them based on whether we will keep them.

R

SpatialDimPlot(filter_st, group.by = "keep")

WARNING

Warning: `aes_string()` was deprecated in ggplot2 3.0.0.

ℹ Please use tidy evaluation idioms with `aes()`.

ℹ See also `vignette("ggplot2-in-packages")` for more information.

ℹ The deprecated feature was likely used in the Seurat package.

Please report the issue at <https://github.com/satijalab/seurat/issues>.

This warning is displayed once every 8 hours.

Call `lifecycle::last_lifecycle_warnings()` to see where this warning was

generated.

When you examine the spots that have been flagged, it is important to look for patterns. If a contiguous section of tissue contains spots that will be removed, it is worth looking at the histology slide to see if there are structures that correlate with the removed spots. If it is a section of necrotic tissue, then, depending on your experimental question, you may want to remove those spots. But you should always look for patterns in the removed spots and convince yourself that they are not biasing your results.

Note that we will only remove a few spots in this filtering step.

R

table(FetchData(filter_st, "keep")[,1])

OUTPUT

FALSE TRUE

6 3633 We can remove the spots directly using the following syntax. In this case, the “columns” of the Seurat object correspond to the spots.

R

filter_st <- filter_st[,keep]

Challenge 1: Change the total counts spot filtering threshold.

- Make a copy of the seurat object.

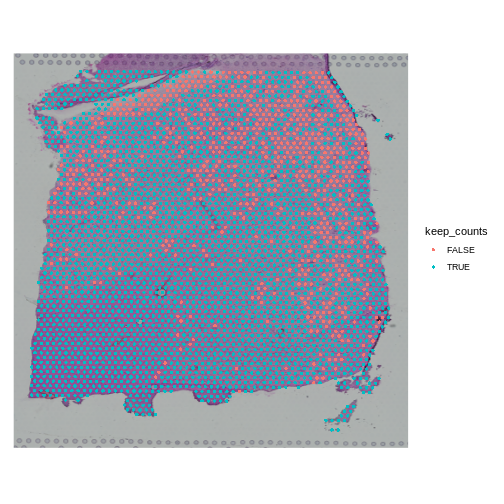

- Change the threshold for the number of UMIs per spot to keep spots with more than 2000 counts. Note that we are filtering on the lower side of the distribution.

- Add new variable called “keep_counts” to the Seurat object.

- Plot the spot overlaid on the tissue section, colored by whether you are keeping them.

Is there a pattern to the removed spots that seems to correlate with the tissue structure?

R

obj <- filter_st

new_counts_thr <- 2000

keep_counts <- FetchData(obj, "nCount_Spatial")[,1] > new_counts_thr

obj$keep_counts <- keep_counts

SpatialDimPlot(obj, group.by = "keep_counts")

Note that the spots that we have flagged seem to correspond to stripes in the tissue section. These may be regions of the brain which have lower levels of gene expression, so we may want to revise or remove this threshold. Overall, this exercise shows that it is important to use judgement when filtering spots.

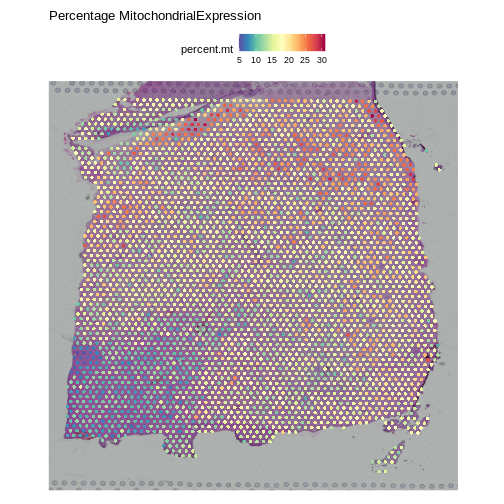

Challenge 2: Change the mitochondrial spot filtering thresholds.

- Make a copy of the seurat object.

- Change the threshold for the percent mitochondrial expression per spot to keep spots with less than 25% mitochondrial expression. This might happen if you decide that too much mitochondrial expression indicates some technical error.

- Add new variable called “keep_counts” to the Seurat object.

- Plot the spot overlaid on the tissue section, colored by whether you are keeping them.

Is there a pattern to the removed spots that seems to correlate with the tissue structure?

R

obj <- filter_st

new_mito_thr <- 20

keep_counts <- FetchData(obj, "percent.mt")[,1] < new_mito_thr

obj$keep_counts <- keep_counts

SpatialDimPlot(obj, group.by = "keep_counts")

Note that the spots that we have flagged are largely outside of the lower left. Later in the lesson, we will find that this is the “white matter”, and this region has different expression from the rest of the tissue section. In fact, mitochondrial expression in general seems to be higher in the upper right area.

R

SpatialFeaturePlot(obj, features = "percent.mt") +

labs(title = "Percentage MitochondrialExpression")

R

rm(obj)

- Spot filtering should be light.

- Inspect the spots that you are filtering to confirm that you are not discarding important tissue structures.

Content from Normalization in Spatial Transcriptomics

Last updated on 2025-10-07 | Edit this page

Estimated time: 70 minutes

Overview

Questions

- What technical and biological factors impact spatial transcriptomics data?

- How do these factors motivate the need for normalization?

- What are popular normalization methods?

- How do we assess the impact of normalization?

Objectives

- Understand and learn to apply popular normalization techniques, such as CPM normalization, log normalization, and SCTransform.

- Diagnose the impact of normalization.

Understanding Normalization in Spatial Transcriptomics

As with scRNA-seq data, normalization is necessary to overcome two technical artifacts in spatial transcriptomics:

- the difference in total counts across spots, and

- the dependence of a gene’s expression variance on its expression level.

The number of total counts in a spot is termed its library size. Since library sizes differ across spots, it will be difficult to compare gene expression values between them in a meaningful way because the denominator (total spot counts) is different in each spot. On the other hand, different spots may contain different types of cells, which may express differing numbers of transcripts. So there is a balance between normalizing all spots to have the same total counts and leaving some variation in total counts which may be due to the biology of the tissue. In general, we want to be cautious that removing the above technical artifacts may also obscure true biological differences.

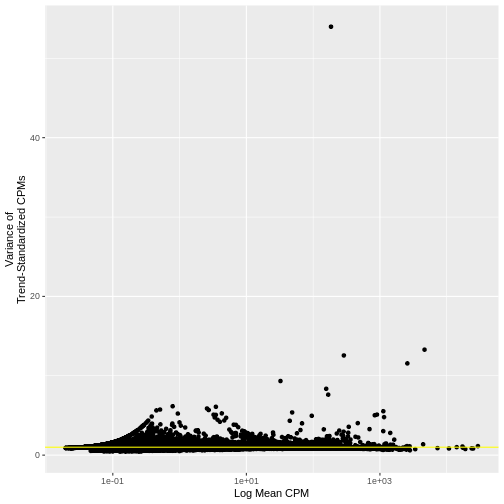

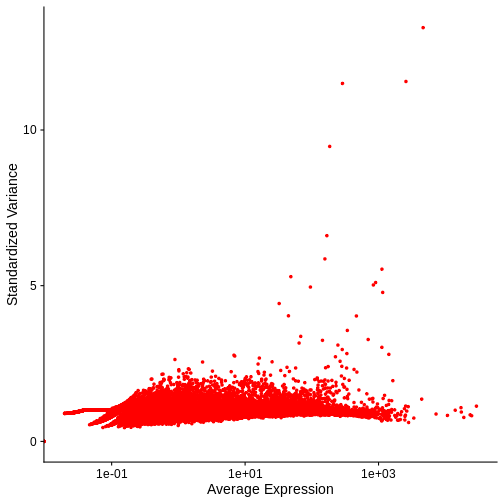

Regarding the second artifact, we will see below that the variance in a gene’s expression scales with its expression. If we do not correct for this effect, differentially expressed genes will be skewed towards the high end of the expression spectrum. Hence, we seek to stabilize the variance – i.e., transform the data such that expression variance is independent of mean expression.

In this lesson we will:

- Observe that total spots per spot are variable

- Explore biological factors that contribute to that variability

- See that gene expression variance is correlated with mean expression

- Apply three methods aimed at mitigating one or both of these technical observations: counts per million (CPM) normalization, log normalization, and Seurat’s SCTransform

Total Counts per Spot are Variable

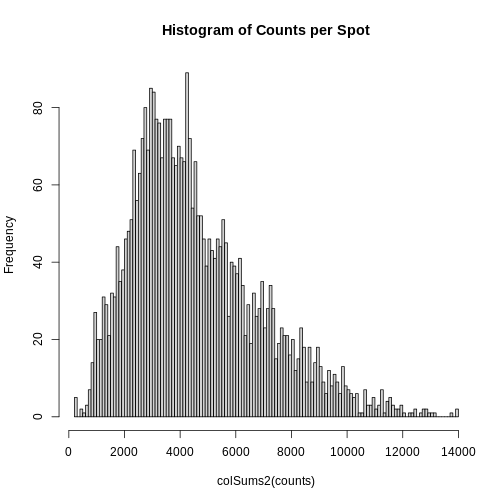

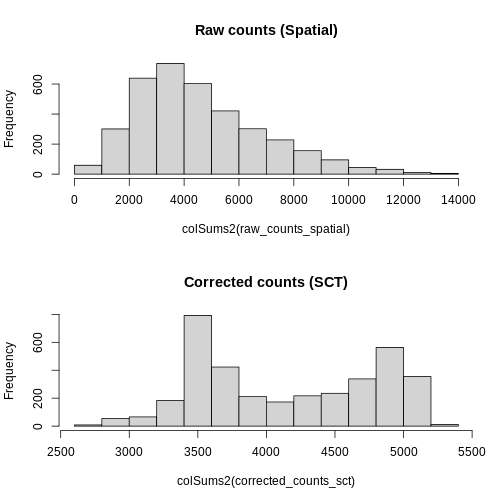

Let’s first assess the variability in the total counts per spot.

The spots are arranged in columns in the data matrix. We will look at the distribution of total counts per spot by summing the counts in each column and making a histogram.

R

# Extract the raw counts (gene by spot matrix) from the Seurat object

counts <- LayerData(filter_st, layer = 'counts')

# Plot a histogram of the total counts (library size or sum across genes).

# Note that this column sum is also encoded in the nCount_Spatial metadata

# variable. We could have simply made a histogram of that variable.

hist(colSums2(counts), breaks = 100,

main = "Histogram of Counts per Spot")

As you can see, the total counts per spot ranges cross four orders of magnitude. Some of this may be due to the biology of the tissue, i.e. some cells may express more transcripts. But some of this may be due to technical issues. Let’s explore each of these two considerations further.

Sources of Biological Variation in Total Counts

Hematoxylin and Eosin (H&E) staining is routinely performed on tissue sections in the clinic for diagnosis. Hematoxylin stains nuclei purple or blue and eosin stains cytoplasm and extracellular matrix pink. Collectively, they elucidate tissue and cellular morphology, which can guide interpretation of transcriptomic data. For example, observing high RNA counts in a necrotic region, which should instead have fewer cells, might suggest technical artifacts and, thus, indicate a need for normalization.

Maynard and colleagues used the information encoded in the H&E, in particular cellular organization, morphology, and density, in conjunction with expression data to annotate the six layers and the white matter of the neocortex. Additionally, they applied standard image processing techniques to the H&E image to segment and count nuclei under each spot. They provide this as metadata. Let’s load that layer annotation and cell count metadata and add it to our Seurat object.

R

# Load the metadata provided by Maynard et al.

spot_metadata <- read.table("./data/spot-meta.tsv", sep="\t")

# Subset the metadata (across all samples) to our sample

spot_metadata <- subset(spot_metadata, sample_name == 151673)

# Format the metadata by setting rowname to the barcode (id) of each spot,

# by ensuring that each spot in our data is represented in the metadata,

# and by ordering the spots within the metadata consistently with the data.

rownames(spot_metadata) <- spot_metadata$barcode

stopifnot(all(Cells(filter_st) %in% rownames(spot_metadata)))

spot_metadata <- spot_metadata[Cells(filter_st),]

# Add the layer annotation (layer_guess) and cell count as

# metadata to the Seurat object using AddMetaData.

filter_st <- AddMetaData(object = filter_st,

metadata = spot_metadata[, c("layer_guess", "cell_count"), drop=FALSE])

Now, we can plot the layer annotations to understand the structure of

the tissue. We will use a simple wrapper,

SpatialDimPlotColorSafe, around the Seurat function

SpatialDimPlot. This is defined in

code/spatial_utils.R and uses a color-blind safe

palette.

R

# Plot the layer annotations on the tissue, omitting any spots

# that do not have annotations (*i.e.*, having NA values)

SpatialDimPlotColorSafe(filter_st[, !is.na(filter_st[[]]$layer_guess)],

"layer_guess") + labs(fill="Layer")

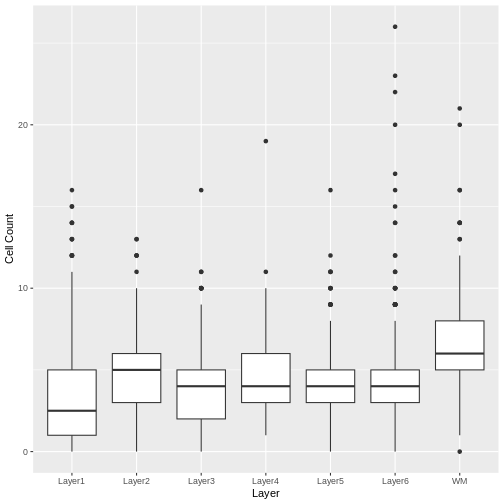

We noted that the authors used cellular density to aid in discerning layers. Let’s see how those H&E-derived cell counts vary across layers.

R

# Make a boxplot of spot-level cell counts, faceted by layer annotation.

# As above, remove any spots without annotations (*i.e.*, having NA values).

g <- ggplot(na.omit(filter_st[[]][, c("layer_guess", "cell_count")]),

aes(x = layer_guess, y = cell_count))

g <- g + geom_boxplot() + xlab("Layer") + ylab("Cell Count")

g

We see that the white matter (WM) has increased cells per spot, whereas Layer 1 has fewer cells per spot.

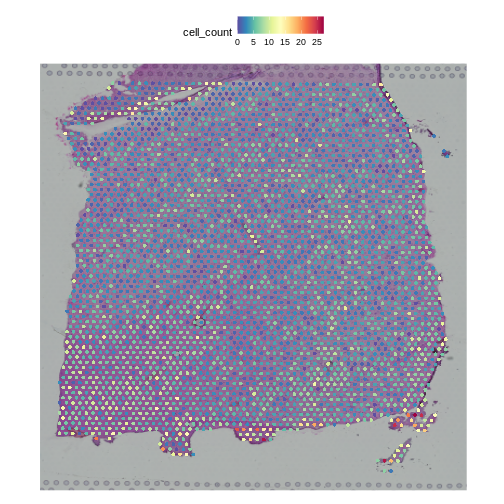

We can also plot these cell counts spatially.

R

SpatialFeaturePlot(filter_st, "cell_count")

The cell counts partially reflect the banding of the layers.

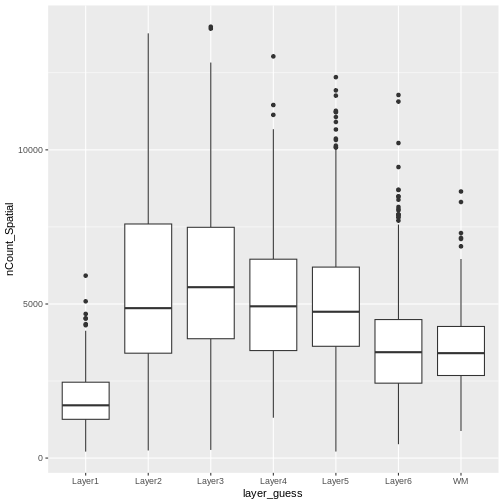

As a potential surrogate for cell count, let’s plot the total counts (number of UMIs or library size) per spot as a function of layer.

R

# Make a boxplot of spot-level total read counts (library size), faceted by layer annotation.

# Remove any spots without annotations (*i.e.*, having NA values).

g <- ggplot(na.omit(filter_st[[]][, c("layer_guess", "nCount_Spatial")]),

aes(x = layer_guess, y = nCount_Spatial))

g <- g + geom_boxplot()

g

Layer 1 has fewer total read counts, consistent with its lower cell count. An increase in total read counts consistent with that in cell count is not observed for the white matter, however. Regardless, there are clear differences in total read counts across brain layers.

In summary, we have observed that both total read counts (library size) and feature counts (number of detected genes) can encode biological information. As such, we strongly recommend visualizing raw gene and features counts prior to normalization, which would remove differences in library size across spots.

Normalization Techniques to Mitigate Sources of Technical Variation in Total Counts

“Counts Per Million” Library Size Normalization

The first technical issue we noted above was a difference in total counts or library size across spots. A straightfoward means of addressing this is simply to divide all gene counts within the spot by the total counts in that spot. Conventionally, we then multiply by a million, which yields “counts per million” (CPM). Adopting this particular factor consistently establishes a standard scale across studies.

You should be aware, however, that the CPM approach is susceptible to “compositional bias” – if a small number of genes make a large contribution to the total count, any significant fluctuation in their expression across samples will impact the quantification of all other genes. To overcome this, more robust measures of library size that are more resilient to compositional bias are sometimes used, including the 75th percentile of counts within a sample (or here, spot). For simplicity, here we will use CPM.

In Seurat, we can apply this transformation via the

NormalizeData function, parameterized by the relative

counts (or “RC”) normalization method. We scale the results to a million

cells through the scale.factor parameter.

R

# Apply CPM normalization using NormalizeData, by specifying the relative

# counts (RC) normalization.method and a scale factor of one million.

cpm_st <- NormalizeData(filter_st,

assay = "Spatial",

normalization.method = "RC",

scale.factor = 1e6)

NormalizeData adds a data object to the

Seurat object.

R

# Access the layers of the Seurat object

Layers(cpm_st)

OUTPUT

[1] "counts" "data" We can confirm that we have indeed normalized away differences in total counts – all spots now have one million reads:

R

# Examine the total counts in each spot, as the sum of the columns.

# As above, we could have also used nCount_Spatial in the metadata.

head(colSums(LayerData(cpm_st, "data")))

OUTPUT

AAACAAGTATCTCCCA-1 AAACAATCTACTAGCA-1 AAACACCAATAACTGC-1 AAACAGAGCGACTCCT-1

1e+06 1e+06 1e+06 1e+06

AAACAGCTTTCAGAAG-1 AAACAGGGTCTATATT-1

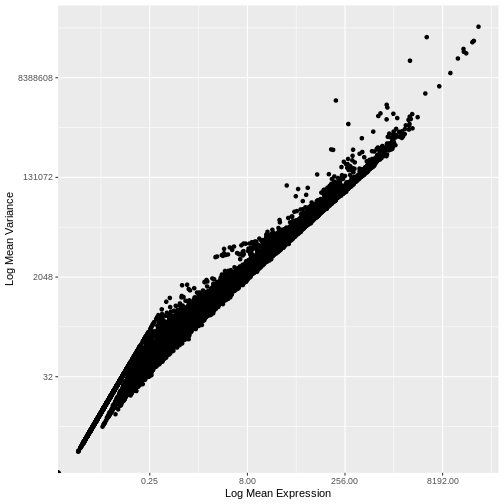

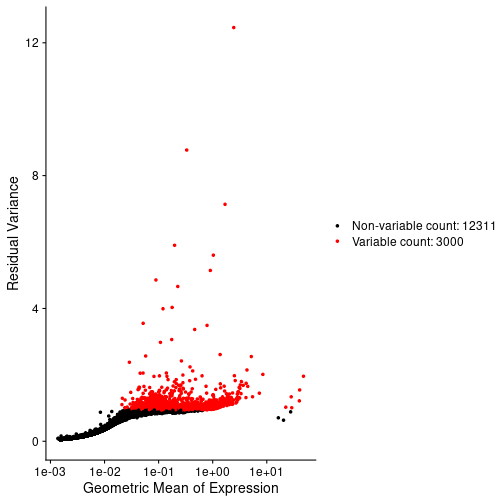

1e+06 1e+06 Our second concern was that variance might differ across genes in an expression dependent manner. To diagnose this, we will make a so-called mean-variance plot, with each gene’s mean expression across spots on the x axis and its variance across spots on the y axis. This shows any potential trends between each gene’s mean expression and the variance of that expression.

R

# Extract the CPM data computed above

cpms <- LayerData(cpm_st, "data")

# Calculate the mean and variance of the CPMs

means <- apply(cpms, 1, mean)

vars <- apply(cpms, 1, var)

# Assemble the mean and variance into a data.frame

gene.info <- data.frame(mean = means, variance = vars)

# Plot the mean expression on the x axis and the variance in expression on

# the y axis

g <- ggplot() + geom_point(data = gene.info, aes(x = mean, y = variance))

# Log transform both axes

g <- g + scale_x_continuous(trans='log2') + scale_y_continuous(trans='log2')

g <- g + xlab("Log Mean Expression") + ylab("Log Mean Variance")

g

WARNING

Warning in scale_x_continuous(trans = "log2"): log-2 transformation introduced

infinite values.WARNING

Warning in scale_y_continuous(trans = "log2"): log-2 transformation introduced

infinite values.

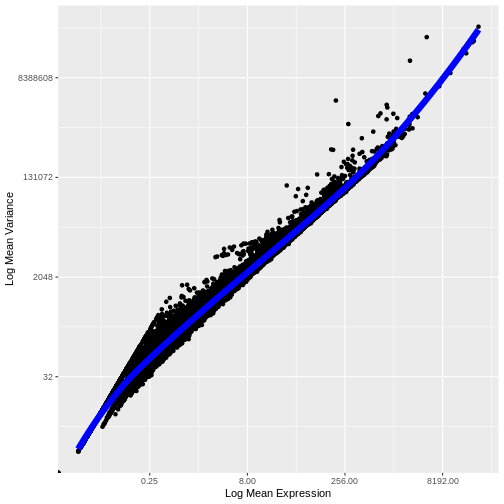

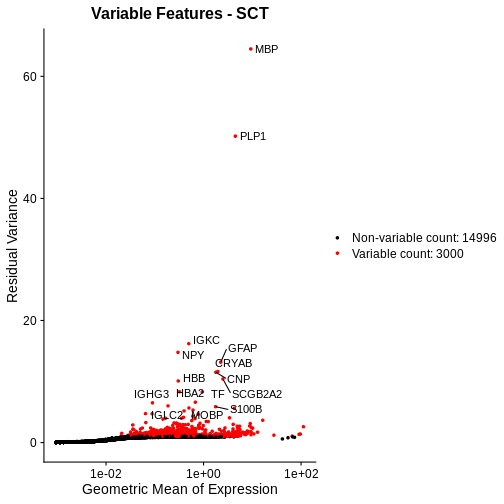

There is a clear relationship between the mean and variance of gene expression. Our goal was instead that the variance be independent of the mean. One way of achieving this is to detrend the data by fitting a smooth curve to the mean-variance plot. This fit will capture the general behavior of most genes. And, since we expect most genes to exhibit technical variability only and not biological variability additionally, this trend will reflect technical variance. Let’s start by characterizing the trend in the data by fitting them with a smooth line. We will use LOESS (locally estimated scatterplot smoothing) regression, an approach originally developed to fit a smooth line through data points in a scatterplot.

R

# This is the default LOESS span used by Seurat in FindVariableFeatures

loess.span <- 0.3

# Exclude genes with constant variance from our fit.

not.const <- gene.info$variance > 0

# Fit a LOESS trend line relating the (log10) gene expression variance

# to the (log10) gene expression mean, but only for the non-constant

# variance genes.

fit <- loess(formula = log10(x = variance) ~ log10(x = mean),

data = gene.info[not.const, ], span = loess.span)

Let’s now plot the fitted/expected variances as a function of the observed means.

R

# The expected variance computed from the model are in fit$fitted.

# Exponentiate because the original model was fit to log10-transformed means and variances.

gene.info$variance.expected <- NA

gene.info[not.const, "variance.expected"] <- 10^fit$fitted

# Plot the expected variance as a function of the observed means for only

# the non-constant variance genes.

g <- g + geom_line(data = na.omit(gene.info[not.const,]),

aes(x = mean, y = variance.expected), linewidth = 3, color = "blue")

g

WARNING

Warning in scale_x_continuous(trans = "log2"): log-2 transformation introduced

infinite values.WARNING

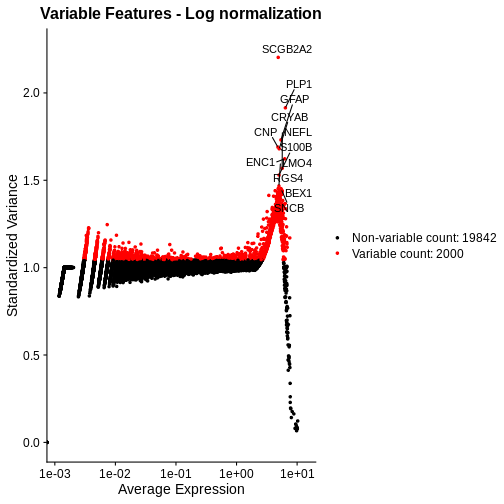

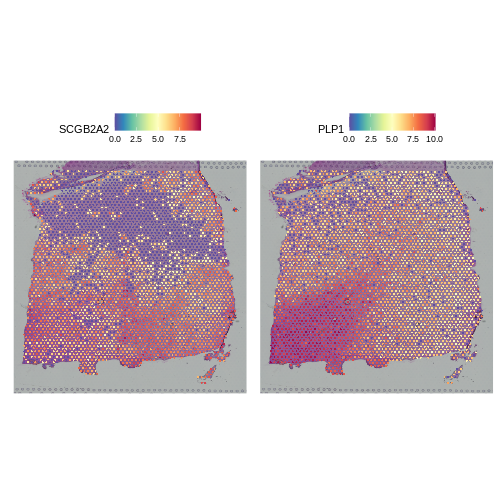

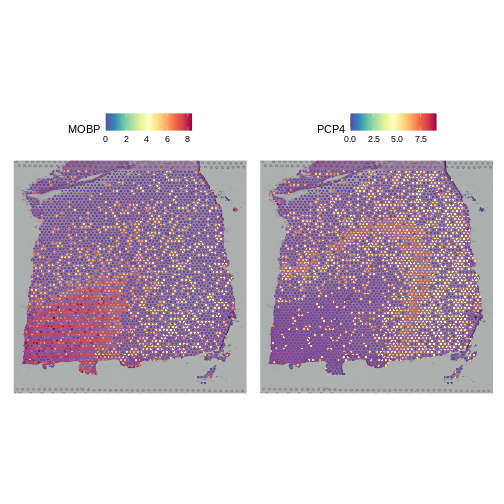

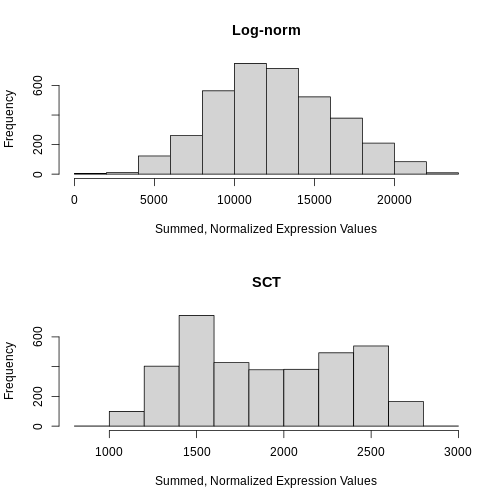

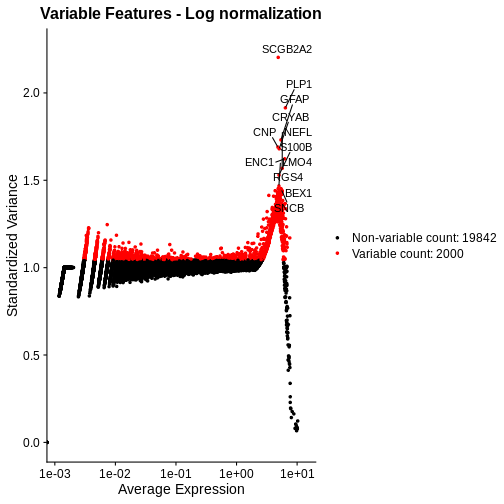

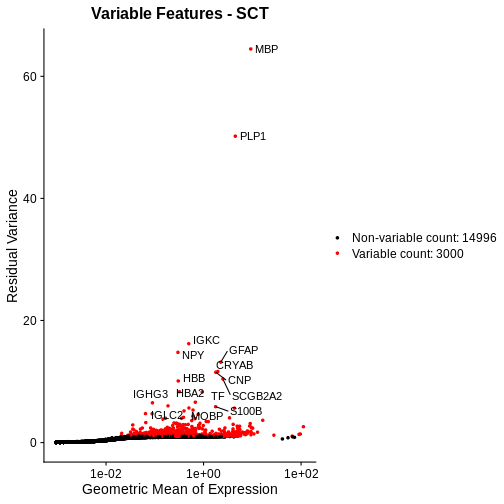

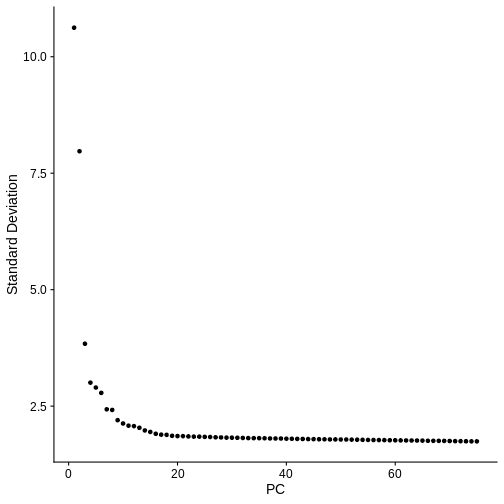

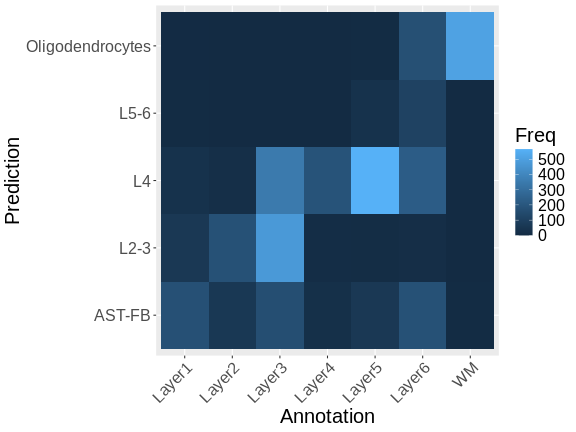

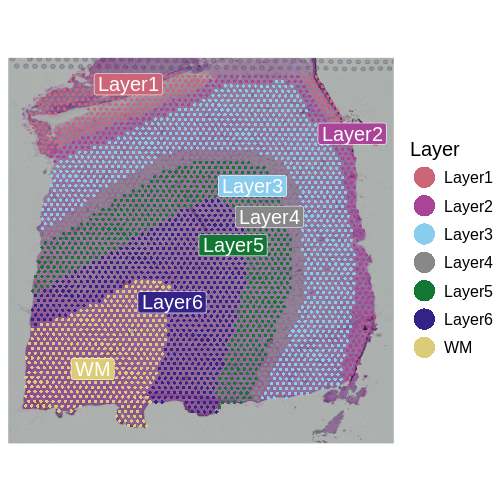

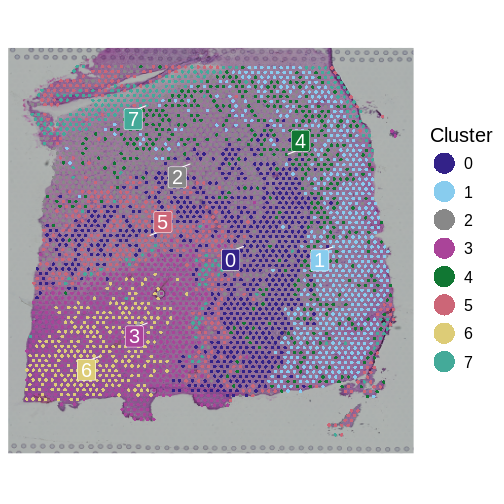

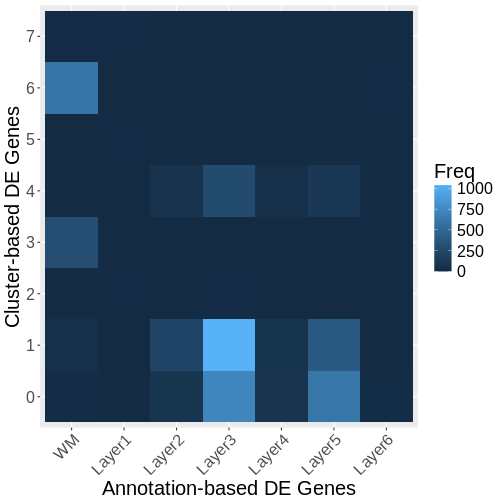

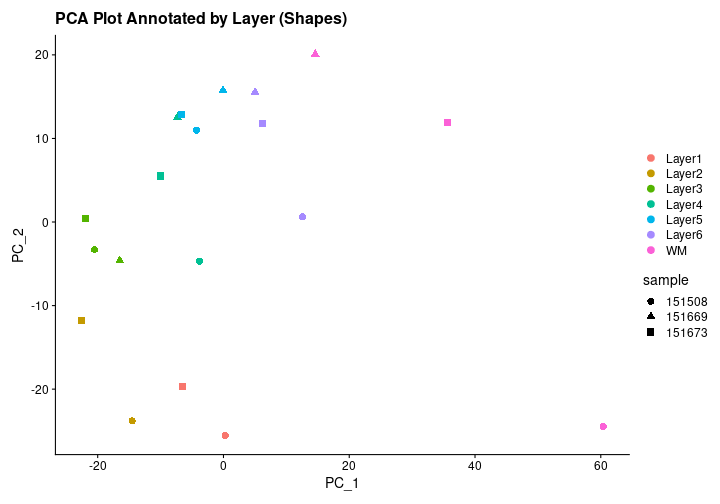

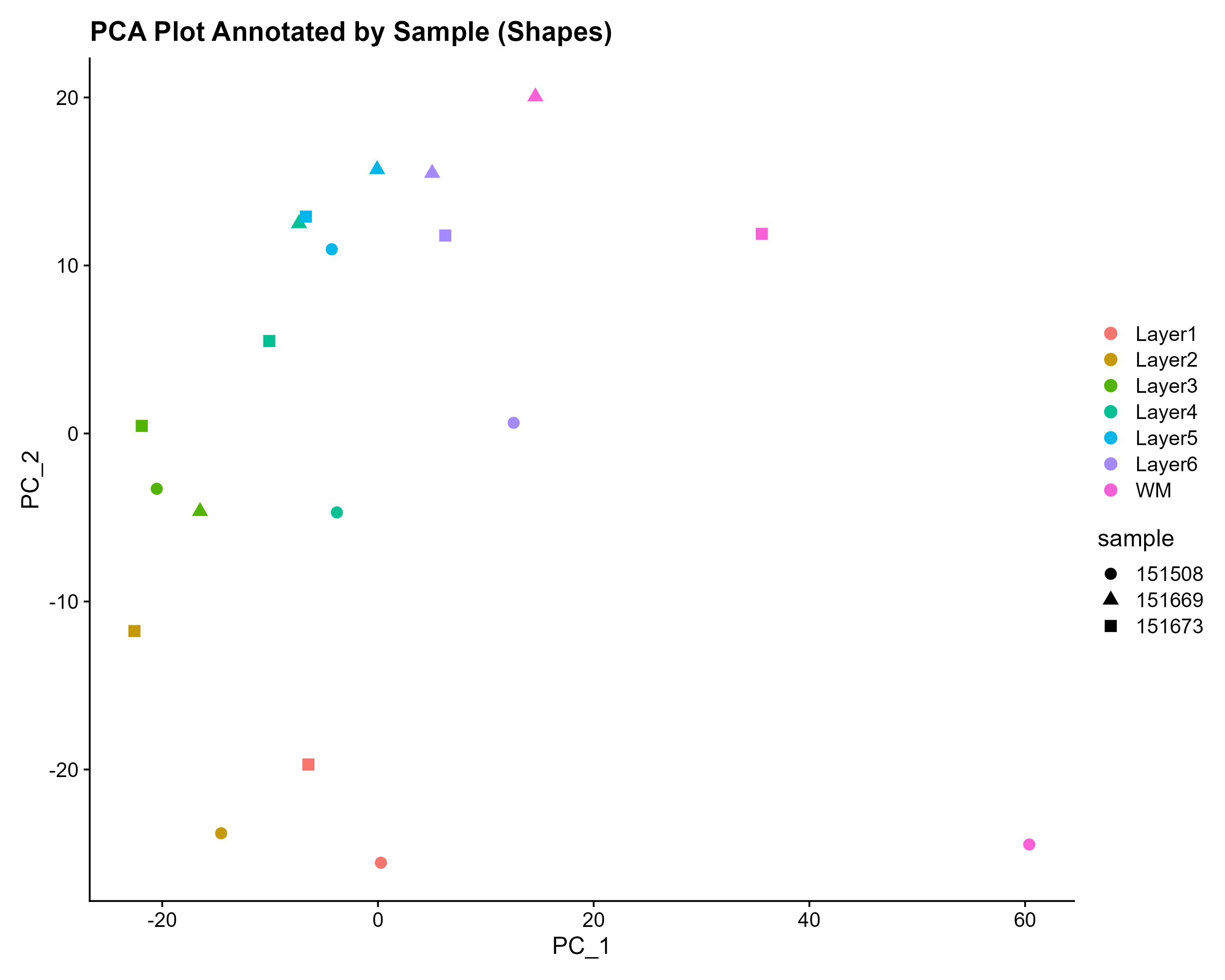

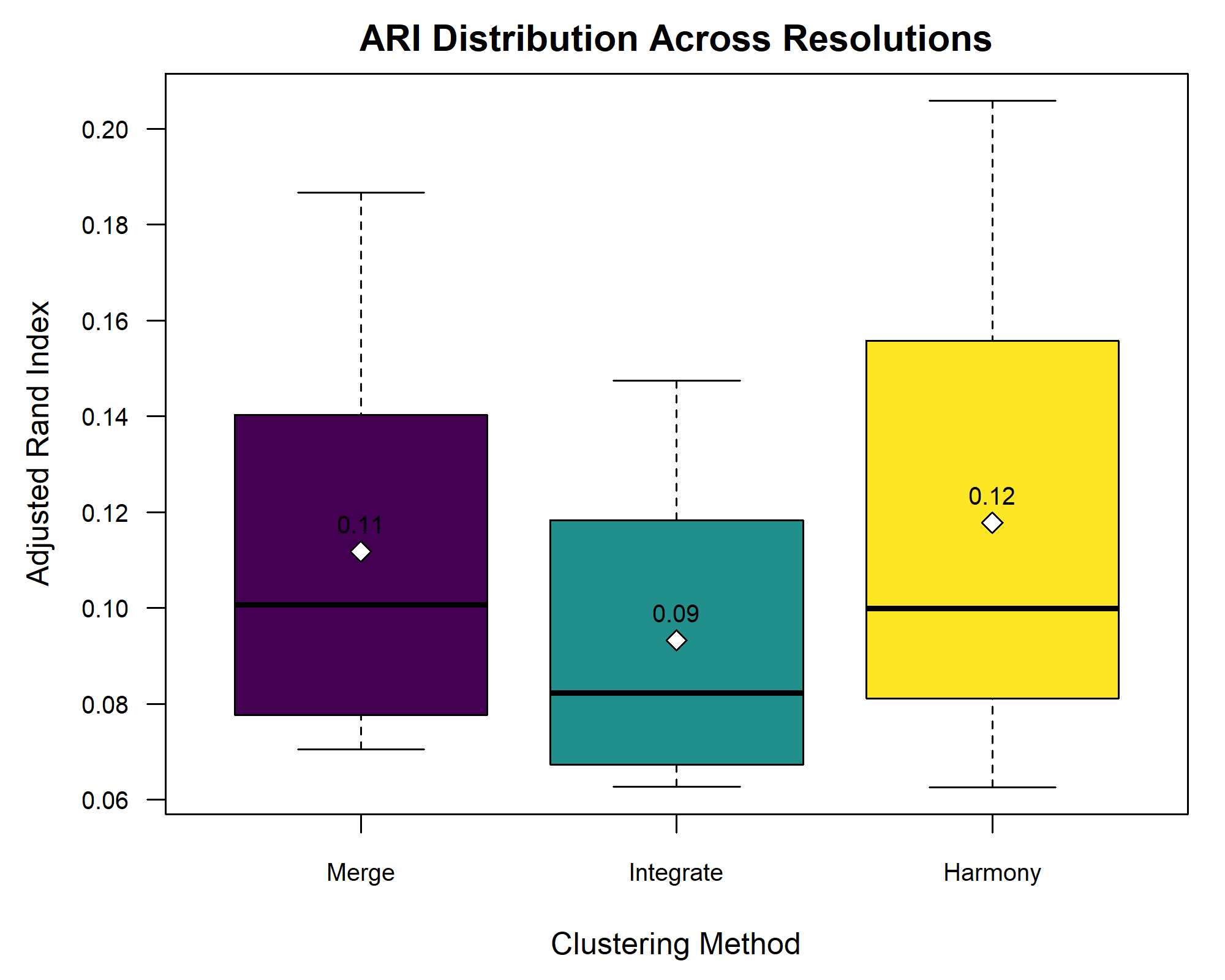

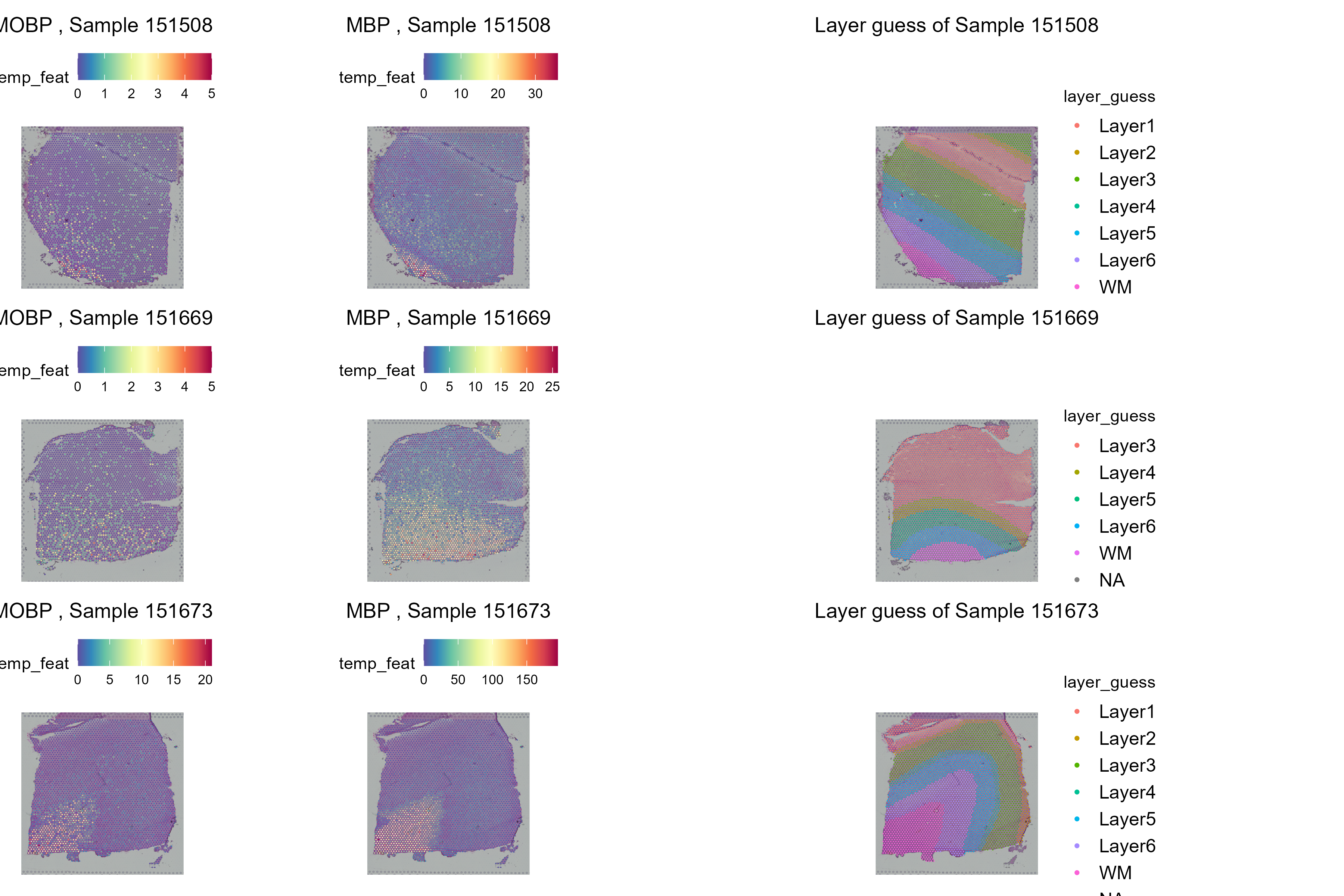

Warning in scale_y_continuous(trans = "log2"): log-2 transformation introduced